
| author | Daniel Baumann <daniel.baumann@progress-linux.org> | 2024-04-13 12:04:41 +0000 |
|---|---|---|
| committer | Daniel Baumann <daniel.baumann@progress-linux.org> | 2024-04-13 12:04:41 +0000 |
| commit | 975f66f2eebe9dadba04f275774d4ab83f74cf25 | |
| tree | 89bd26a93aaae6a25749145b7e4bca4a1e75b2be /ansible_collections/ovirt | |
| parent | Initial commit. | |
| download | ansible-975f66f2eebe9dadba04f275774d4ab83f74cf25.tar.xz ansible-975f66f2eebe9dadba04f275774d4ab83f74cf25.zip | |

Adding upstream version 7.7.0+dfsg.

upstream/7.7.0+dfsg

Signed-off-by: Daniel Baumann <daniel.baumann@progress-linux.org>

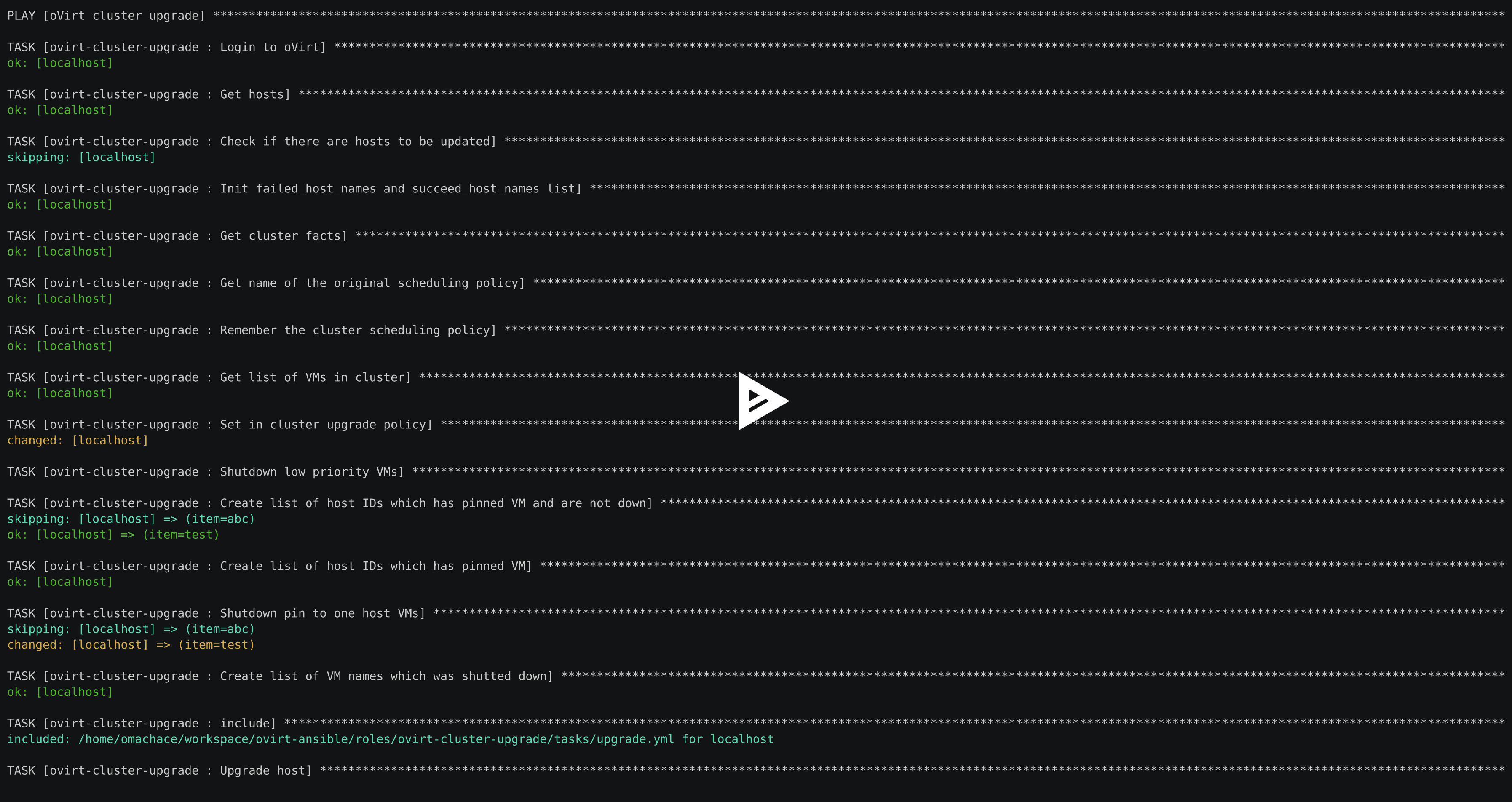

Diffstat (limited to 'ansible_collections/ovirt')

386 files changed, 43377 insertions, 0 deletions