| author | Daniel Baumann <daniel.baumann@progress-linux.org> | 2022-08-12 07:26:17 +0000 |
|---|---|---|
| committer | Daniel Baumann <daniel.baumann@progress-linux.org> | 2022-08-12 07:26:17 +0000 |
| commit | 7877a98bd9c00db5e81dd2f8c734cba2bab20be7 (patch) | |
| tree | d18b767250f7c7ced9b8abe2ece784ac1fe24d3e /docs/guides | |
| parent | Releasing debian version 1.35.1-2. (diff) | |
| download | netdata-7877a98bd9c00db5e81dd2f8c734cba2bab20be7.tar.xz netdata-7877a98bd9c00db5e81dd2f8c734cba2bab20be7.zip | |

Merging upstream version 1.36.0.

Signed-off-by: Daniel Baumann <daniel.baumann@progress-linux.org>

Diffstat (limited to 'docs/guides')

| mode | file | lines changed |
|---|---|---|
| -rw-r--r-- | docs/guides/deploy/ansible.md | 2 |
| -rw-r--r-- | docs/guides/longer-metrics-storage.md | 218 |
| -rw-r--r-- | docs/guides/monitor/anomaly-detection.md | 271 |
| -rw-r--r-- | docs/guides/troubleshoot/troubleshooting-agent-with-cloud-connection.md | 117 |

4 files changed, 263 insertions, 345 deletions

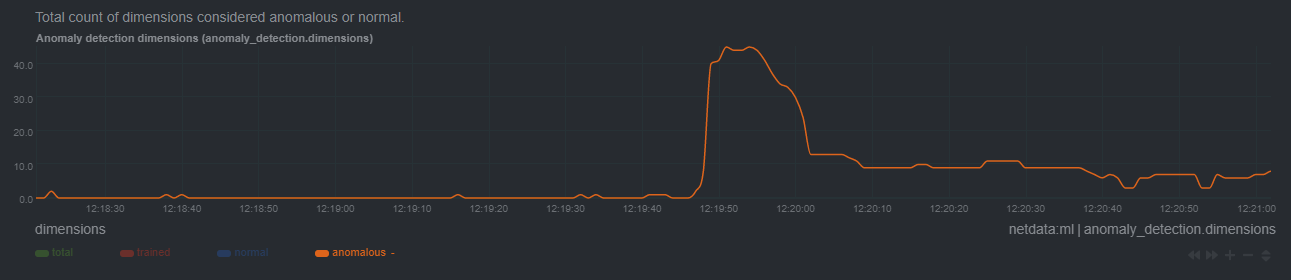

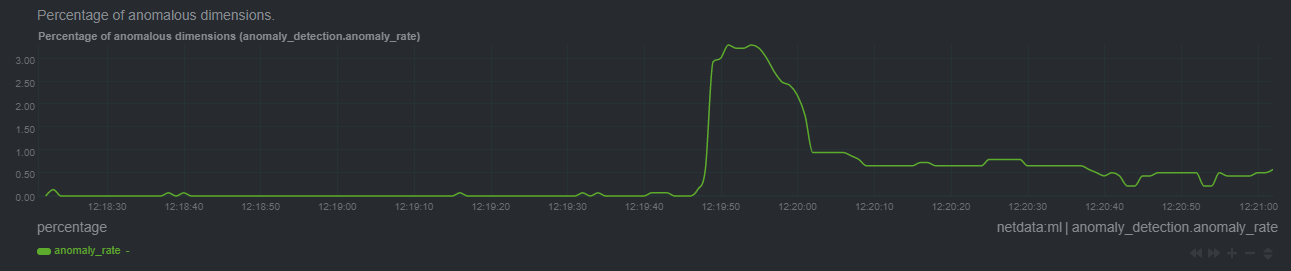

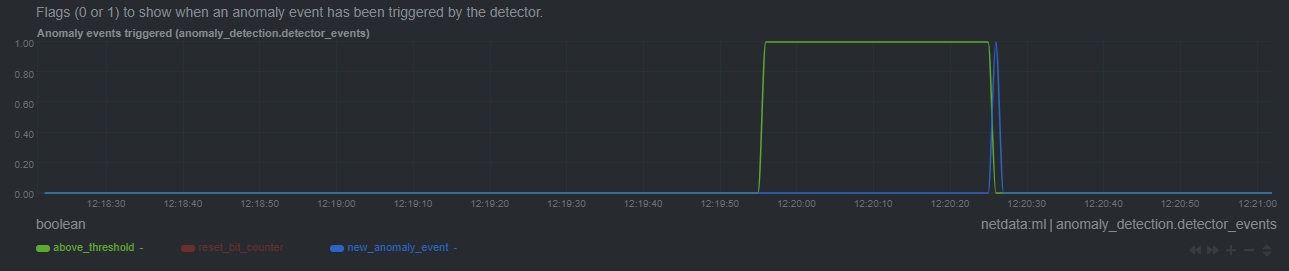

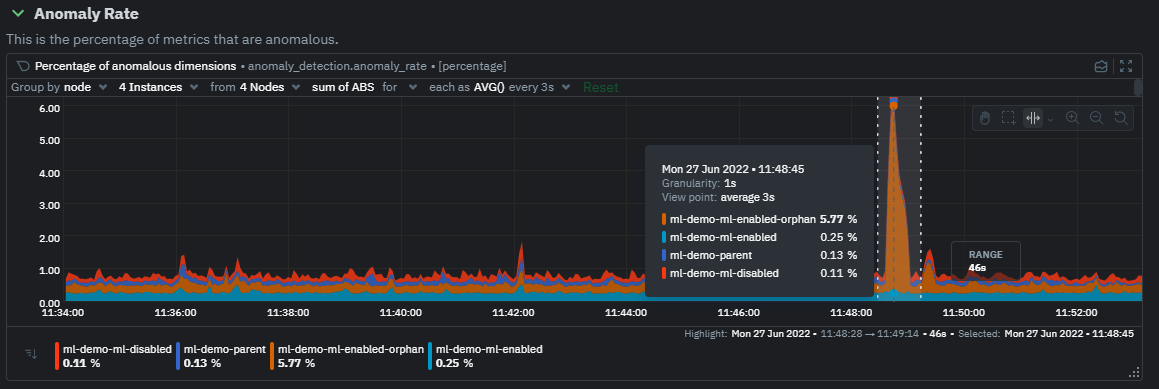

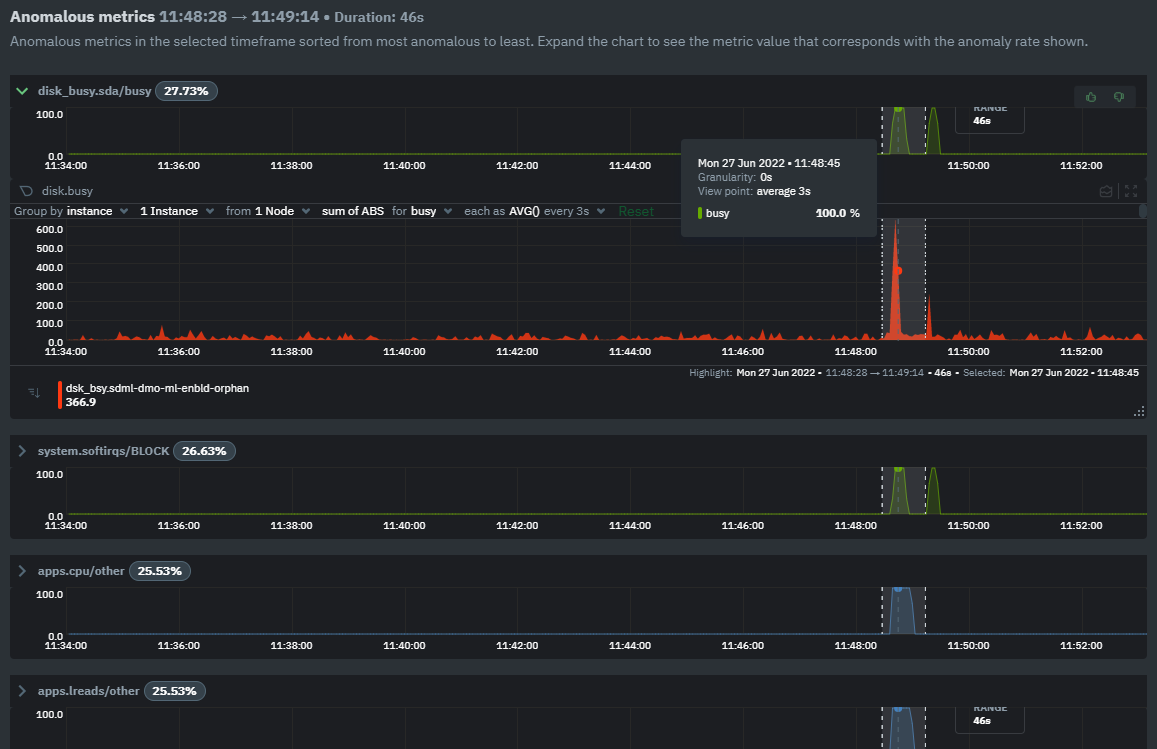

diff --git a/docs/guides/deploy/ansible.md b/docs/guides/deploy/ansible.md index 7e5532b62..35c946021 100644 --- a/docs/guides/deploy/ansible.md +++ b/docs/guides/deploy/ansible.md @@ -56,6 +56,8 @@ mv community/netdata-agent-deployment/ansible-quickstart . rm -rf community ``` +Or if you don't want to clone the entire repository, use the [gitzip browser extension](https://gitzip.org/) to get the netdata-agent-deployment directory as a zip file. + Next, `cd` into the Ansible directory. ```bash diff --git a/docs/guides/longer-metrics-storage.md b/docs/guides/longer-metrics-storage.md index 85edb55ee..8ccd9585f 100644 --- a/docs/guides/longer-metrics-storage.md +++ b/docs/guides/longer-metrics-storage.md @@ -1,160 +1,158 @@ <!-- -title: "Change how long Netdata stores metrics" -description: "With a single configuration change, the Netdata Agent can store days, weeks, or months of metrics at its famous per-second granularity." +title: "Netdata Longer Metrics Retention" +description: "" custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/longer-metrics-storage.md --> -# Change how long Netdata stores metrics +# Netdata Longer Metrics Retention -Netdata helps you collect thousands of system and application metrics every second, but what about storing them for the -long term? +Metrics retention affects 3 parameters on the operation of a Netdata Agent: -Many people think Netdata can only store about an hour's worth of real-time metrics, but that's simply not true any -more. With the right settings, Netdata is quite capable of efficiently storing hours or days worth of historical, -per-second metrics without having to rely on an [exporting engine](/docs/export/external-databases.md). +1. The disk space required to store the metrics. +2. The memory the Netdata Agent will require to have that retention available for queries. +3. The CPU resources that will be required to query longer time-frames. 
-This guide gives two options for configuring Netdata to store more metrics. **We recommend the default [database -engine](#using-the-database-engine)**, but you can stick with or switch to the round-robin database if you prefer. +As retention increases, the resources required to support that retention increase too. -Let's get started. +Since Netdata Agents usually run at the edge, inside production systems, Netdata Agent **parents** should be considered. When having a **parent - child** setup, the child (the Netdata Agent running on a production system) delegates all its functions, including longer metrics retention and querying, to the parent node that can dedicate more resources to this task. A single Netdata Agent parent can centralize multiple children Netdata Agents (dozens, hundreds, or even thousands depending on its available resources). -## Using the database engine -The database engine uses RAM to store recent metrics while also using a "spill to disk" feature that takes advantage of -available disk space for long-term metrics storage. This feature of the database engine allows you to store a much -larger dataset than your system's available RAM. +## Ephemerality of metrics -The database engine is currently the default method of storing metrics, but if you're not sure which database you're -using, check out your `netdata.conf` file and look for the `memory mode` setting: +The ephemerality of metrics plays an important role in retention. In environments where metrics stop being collected and new metrics are constantly being generated, we are interested about 2 parameters: -```conf -[global] - memory mode = dbengine -``` - -If `memory mode` is set to anything but `dbengine`, change it and restart Netdata using the standard command for -restarting services on your system. You're now using the database engine! +1. The **expected concurrent number of metrics** as an average for the lifetime of the database. + This affects mainly the storage requirements. 
-What makes the database engine efficient? While it's structured like a traditional database, the database engine splits -data between RAM and disk. The database engine caches and indexes data on RAM to keep memory usage low, and then -compresses older metrics onto disk for long-term storage. +2. The **expected total number of unique metrics** for the lifetime of the database. + This affects mainly the memory requirements for having all these metrics indexed and available to be queried. -When the Netdata dashboard queries for historical metrics, the database engine will use its cache, stored in RAM, to -return relevant metrics for visualization in charts. +## Granularity of metrics -Now, given that the database engine uses _both_ RAM and disk, there are two other settings to consider: `page cache -size` and `dbengine multihost disk space`. +The granularity of metrics (the frequency they are collected and stored, i.e. their resolution) is significantly affecting retention. -```conf -[global] - page cache size = 32 - dbengine multihost disk space = 256 -``` +Lowering the granularity from per second to every two seconds, will double their retention and half the CPU requirements of the Netdata Agent, without affecting disk space or memory requirements. -`page cache size` sets the maximum amount of RAM (in MiB) the database engine will use for caching and indexing. -`dbengine multihost disk space` sets the maximum disk space (again, in MiB) the database engine will use for storing -compressed metrics. The default settings retain about two day's worth of metrics on a system collecting 2,000 metrics -every second. +## Which database mode to use -[**See our database engine -calculator**](/docs/store/change-metrics-storage.md#calculate-the-system-resources-ram-disk-space-needed-to-store-metrics) -to help you correctly set `dbengine multihost disk space` based on your needs. 
The calculator gives an accurate estimate -based on how many child nodes you have, how many metrics your Agent collects, and more. +Netdata Agents support multiple database modes. -With the database engine active, you can back up your `/var/cache/netdata/dbengine/` folder to another location for -redundancy. +The default mode `[db].mode = dbengine` has been designed to scale for longer retentions. -Now that you know how to switch to the database engine, let's cover the default round-robin database for those who -aren't ready to make the move. +The other available database modes are designed to minimize resource utilization and should usually be considered on **parent - child** setups at the children side. -## Using the round-robin database +So, -In previous versions, Netdata used a round-robin database to store 1 hour of per-second metrics. +* On a single node setup, use `[db].mode = dbengine` to increase retention. +* On a **parent - child** setup, use `[db].mode = dbengine` on the parent to increase retention and a more resource efficient mode (like `save`, `ram` or `none`) for the child to minimize resources utilization. -To see if you're still using this database, or if you would like to switch to it, open your `netdata.conf` file and see -if `memory mode` option is set to `save`. +To use `dbengine`, set this in `netdata.conf` (it is the default): -```conf -[global] - memory mode = save ``` +[db] + mode = dbengine +``` + +## Tiering -If `memory mode` is set to `save`, then you're using the round-robin database. If so, the `history` option is set to -`3600`, which is the equivalent to 3,600 seconds, or one hour. +`dbengine` supports tiering. Tiering allows having up to 3 versions of the data: -To increase your historical metrics, you can increase `history` to the number of seconds you'd like to store: +1. Tier 0 is the high resolution data. +2. Tier 1 is the first tier that samples data every 60 data collections of Tier 0. +3. 
Tier 2 is the second tier that samples data every 3600 data collections of Tier 0 (60 of Tier 1). -```conf -[global] - # 2 hours = 2 * 60 * 60 = 7200 seconds - history = 7200 - # 4 hours = 4 * 60 * 60 = 14440 seconds - history = 14440 - # 24 hours = 24 * 60 * 60 = 86400 seconds - history = 86400 +To enable tiering set `[db].storage tiers` in `netdata.conf` (the default is 1, to enable only Tier 0): + +``` +[db] + mode = dbengine + storage tiers = 3 ``` -And so on. +## Disk space requirements -Next, check to see how many metrics Netdata collects on your system, and how much RAM that uses. Visit the Netdata -dashboard and look at the bottom-right corner of the interface. You'll find a sentence similar to the following: +Netdata Agents require about 1 bytes on disk per database point on Tier 0 and 4 times more on higher tiers (Tier 1 and 2). They require 4 times more storage per point compared to Tier 0, because for every point higher tiers store `min`, `max`, `sum`, `count` and `anomaly rate` (the values are 5, but they require 4 times the storage because `count` and `anomaly rate` are 16-bit integers). The `average` is calculated on the fly at query time using `sum / count`. -> Every second, Netdata collects 1,938 metrics, presents them in 299 charts and monitors them with 81 alarms. Netdata is -> using 25 MB of memory on **netdata-linux** for 1 hour, 6 minutes and 36 seconds of real-time history. +### Tier 0 - per second for a week -On this desktop system, using a Ryzen 5 1600 and 16GB of RAM, the round-robin databases uses 25 MB of RAM to store just -over an hour's worth of data for nearly 2,000 metrics. +For 2000 metrics, collected every second and retained for a week, Tier 0 needs: 1 byte x 2000 metrics x 3600 secs per hour x 24 hours per day x 7 days per week = 1100MB. -To increase the `history` option, you need to edit your `netdata.conf` file and increase the `history` setting. 
In most -installations, you'll find it at `/etc/netdata/netdata.conf`, but some operating systems place it at -`/opt/netdata/etc/netdata/netdata.conf`. +The setting to control this is in `netdata.conf`: -Use `/etc/netdata/edit-config netdata.conf`, or your favorite text editor, to replace `3600` with the number of seconds -you'd like to store. +``` +[db] + mode = dbengine + + # per second data collection + update every = 1 + + # enable only Tier 0 + storage tiers = 1 + + # Tier 0, per second data for a week + dbengine multihost disk space MB = 1100 +``` -You should base this number on two things: How much history you need for your use case, and how much RAM you're willing -to dedicate to Netdata. +By setting it to `1100` and restarting the Netdata Agent, this node will start maintaining about a week of data. But pay attention to the number of metrics. If you have more than 2000 metrics on a node, or you need more that a week of high resolution metrics, you may need to adjust this setting accordingly. -> Take care when you change the `history` option on production systems. Netdata is configured to stop its process if -> your system starts running out of RAM, but you can never be too careful. Out of memory situations are very bad. +### Tier 1 - per minute for a month -How much RAM will a longer history use? Let's use a little math. +Tier 1 is by default sampling the data every 60 points of Tier 0. If Tier 0 is per second, then Tier 1 is per minute. -The round-robin database needs 4 bytes for every value Netdata collects. If Netdata collects metrics every second, -that's 4 bytes, per second, per metric. +Tier 1 needs 4 times more storage per point compared to Tier 0. So, for 2000 metrics, with per minute resolution, retained for a month, Tier 1 needs: 4 bytes x 2000 metrics x 60 minutes per hour x 24 hours per day x 30 days per month = 330MB. 
-```text -4 bytes * X seconds * Y metrics = RAM usage in bytes +Do this in `netdata.conf`: + +``` +[db] + mode = dbengine + + # per second data collection + update every = 1 + + # enable only Tier 0 and Tier 1 + storage tiers = 2 + + # Tier 0, per second data for a week + dbengine multihost disk space MB = 1100 + + # Tier 1, per minute data for a month + dbengine tier 1 multihost disk space MB = 330 ``` -Let's assume your system collects 1,000 metrics per second. +Once `netdata.conf` is edited, the Netdata Agent needs to be restarted for the changes to take effect. -```text -4 bytes * 3600 seconds * 1,000 metrics = 14400000 bytes = 14.4 MB RAM -``` +### Tier 2 - per hour for a year -With that formula, you can calculate the RAM usage for much larger history settings. - -```conf -# 2 hours at 1,000 metrics per second -4 bytes * 7200 seconds * 1,000 metrics = 28800000 bytes = 28.8 MB RAM -# 2 hours at 2,000 metrics per second -4 bytes * 7200 seconds * 2,000 metrics = 57600000 bytes = 57.6 MB RAM -# 4 hours at 2,000 metrics per second -4 bytes * 14440 seconds * 2,000 metrics = 115520000 bytes = 115.52 MB RAM -# 24 hours at 1,000 metrics per second -4 bytes * 86400 seconds * 1,000 metrics = 345600000 bytes = 345.6 MB RAM -``` +Tier 2 is by default sampling data every 3600 points of Tier 0 (60 of Tier 1). If Tier 0 is per second, then Tier 2 is per hour. -## What's next? +The storage requirements are the same to Tier 1. -Now that you have either configured database engine or round-robin database engine to store more metrics, you'll -probably want to see it in action! +For 2000 metrics, with per hour resolution, retained for a year, Tier 2 needs: 4 bytes x 2000 metrics x 24 hours per day x 365 days per year = 67MB. 
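The three per-tier disk space figures in this hunk follow one pattern: bytes per point, times the number of metrics, times the number of points retained per metric. A minimal Python sketch of that arithmetic follows. It assumes the guide's "MB" values are mebibytes, which is an assumption rather than something the guide states, and `tier_disk_mib` is a hypothetical helper, not part of Netdata.

```python
MIB = 1024 * 1024  # assumption: the guide's "MB" figures are mebibytes


def tier_disk_mib(bytes_per_point: int, metrics: int, points_per_metric: int) -> float:
    """Estimated disk space in MiB for one tier (hypothetical helper, not a Netdata API)."""
    return bytes_per_point * metrics * points_per_metric / MIB


METRICS = 2000  # number of metrics used in the guide's examples

# Tier 0: 1 byte per point, one point per second, retained for 7 days
tier0 = tier_disk_mib(1, METRICS, 3600 * 24 * 7)
# Tier 1: 4 bytes per point, one point per minute, retained for 30 days
tier1 = tier_disk_mib(4, METRICS, 60 * 24 * 30)
# Tier 2: 4 bytes per point, one point per hour, retained for 365 days
tier2 = tier_disk_mib(4, METRICS, 24 * 365)

for name, size in (("Tier 0", tier0), ("Tier 1", tier1), ("Tier 2", tier2)):
    print(f"{name}: {size:.1f} MiB")
```

Under that assumption, the Tier 1 and Tier 2 results round to the 330 and 67 used in the guide. The Tier 0 result comes out at roughly 1150, which the guide states as 1100.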
+ +Do this in `netdata.conf`: + +``` +[db] + mode = dbengine + + # per second data collection + update every = 1 + + # enable only Tier 0 and Tier 1 + storage tiers = 3 + + # Tier 0, per second data for a week + dbengine multihost disk space MB = 1100 + + # Tier 1, per minute data for a month + dbengine tier 1 multihost disk space MB = 330 + + # Tier 2, per hour data for a year + dbengine tier 2 multihost disk space MB = 67 +``` -For more information about how to pan charts to view historical metrics, see our documentation on [using -charts](/web/README.md#using-charts). +Once `netdata.conf` is edited, the Netdata Agent needs to be restarted for the changes to take effect. -And if you'd now like to reduce Netdata's resource usage, view our [performance -guide](/docs/guides/configure/performance.md) for our best practices on optimization. diff --git a/docs/guides/monitor/anomaly-detection.md b/docs/guides/monitor/anomaly-detection.md index 7b4388a05..e98c5c02e 100644 --- a/docs/guides/monitor/anomaly-detection.md +++ b/docs/guides/monitor/anomaly-detection.md @@ -5,270 +5,71 @@ image: /img/seo/guides/monitor/anomaly-detection.png author: "Andrew Maguire" author_title: "Analytics & ML Lead" author_img: "/img/authors/andy-maguire.jpg" -custom_edit_url: https://github.com/netdata/netdata/edit/master/ml/README.md +custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/guides/monitor/anomaly-detection.md --> -# Machine learning (ML) powered anomaly detection -## Overview - -As of [`v1.32.0`](https://github.com/netdata/netdata/releases/tag/v1.32.0), Netdata comes with some ML powered [anomaly detection](https://en.wikipedia.org/wiki/Anomaly_detection) capabilities built into it and available to use out of the box, with minimal configuration required. - -🚧 **Note**: This functionality is still under active development and considered experimental. Changes might cause the feature to break. 
We dogfood it internally and among early adopters within the Netdata community to build the feature. If you would like to get involved and help us with some feedback, email us at analytics-ml-team@netdata.cloud or come join us in the [🤖-ml-powered-monitoring](https://discord.gg/4eRSEUpJnc) channel of the Netdata discord. - -Once ML is enabled, Netdata will begin training a model for each dimension. By default this model is a [k-means clustering](https://en.wikipedia.org/wiki/K-means_clustering) model trained on the most recent 4 hours of data. Rather than just using the most recent value of each raw metric, the model works on a preprocessed ["feature vector"](#feature-vector) of recent smoothed and differenced values. This should enable the model to detect a wider range of potentially anomalous patterns in recent observations as opposed to just point anomalies like big spikes or drops. ([This infographic](https://user-images.githubusercontent.com/2178292/144414415-275a3477-5b47-43d6-8959-509eb48ebb20.png) shows some different types of anomalies.) - -The sections below will introduce some of the main concepts: -- anomaly bit -- anomaly score -- anomaly rate -- anomaly detector - -Additional explanations and details can be found in the [Glossary](#glossary) and [Notes](#notes) at the bottom of the page. - -### Anomaly Bit - (100 = Anomalous, 0 = Normal) - -Once each model is trained, Netdata will begin producing an ["anomaly score"](#anomaly-score) at each time step for each dimension. This ["anomaly score"](#anomaly-score) is essentially a distance measure to the trained cluster centers of the model (by default each model has k=2, so two cluster centers are learned). More anomalous looking data should be more distant to those cluster centers. If this ["anomaly score"](#anomaly-score) is sufficiently large, this is a sign that the recent raw values of the dimension could potentially be anomalous. 
By default, "sufficiently large" means that the distance is in the 99th percentile or above of all distances observed during training or, put another way, it has to be further away than the furthest 1% of the data used during training. Once this threshold is passed, the ["anomaly bit"](#anomaly-bit) corresponding to that dimension is set to 100 to flag it as anomalous, otherwise it would be left at 0 to signal normal data. - -What this means is that in addition to the raw value of each metric, Netdata now also stores an ["anomaly bit"](#anomaly-bit) that is either 100 (anomalous) or 0 (normal). Importantly, this is achieved without additional storage overhead due to how the anomaly bit has been implemented within the existing internal Netdata storage representation. - -This ["anomaly bit"](#anomaly-bit) is exposed via the `anomaly-bit` key that can be passed to the `options` param of the `/api/v1/data` REST API. - -For example, here are some recent raw dimension values for `system.ip` on our [london](http://london.my-netdata.io/) demo server: - -[`https://london.my-netdata.io/api/v1/data?chart=system.ip`](https://london.my-netdata.io/api/v1/data?chart=system.ip) - -``` -{ - "labels": ["time", "received", "sent"], - "data": - [ - [ 1638365672, 54.84098, -76.70201], - [ 1638365671, 124.4328, -309.7543], - [ 1638365670, 123.73152, -167.9056], - ... - ] -} -``` - -And if we add the `&options=anomaly-bit` params, we can see the "anomaly bit" value corresponding to each raw dimension value: - -[`https://london.my-netdata.io/api/v1/data?chart=system.ip&options=anomaly-bit`](https://london.my-netdata.io/api/v1/data?chart=system.ip&options=anomaly-bit) - -``` -{ - "labels": ["time", "received", "sent"], - "data": - [ - [ 1638365672, 0, 0], - [ 1638365671, 0, 0], - [ 1638365670, 0, 0], - ... - ] -} -``` -In this example, the dimensions "received" and "sent" didn't show any abnormal behavior, so the anomaly bit is zero. 
-Under normal circumstances, the anomaly bit will mostly be 0. However, there can be random fluctuations setting the anomaly to 100, although this very much depends on the nature of the dimension in question. - -### Anomaly Rate - average(anomaly bit) - -Once all models have been trained, we can think of the Netdata dashboard as essentially a big matrix or table of 0's and 100's. If we consider this "anomaly bit"-based representation of the state of the node, we can now think about how we might detect overall node level anomalies. The figure below illustrates the main ideas. - -``` - dimensions -time d1 d2 d3 d4 d5 NAR - 1 0 0 0 0 0 0% - 2 0 0 0 0 100 20% - 3 0 0 0 0 0 0% - 4 0 100 0 0 0 20% - 5 100 0 0 0 0 20% - 6 0 100 100 0 100 60% - 7 0 100 0 100 0 40% - 8 0 0 0 0 100 20% - 9 0 0 100 100 0 40% - 10 0 0 0 0 0 0% - -DAR 10% 30% 20% 20% 30% 22% NAR_t1-t10 - -DAR = Dimension Anomaly Rate -NAR = Node Anomaly Rate -NAR_t1-t10 = Node Anomaly Rate over t1 to t10 -``` - -To work out an ["anomaly rate"](#anomaly-rate), we can just average a row or a column in any direction. For example, if we were to just average along a row then this would be the ["node anomaly rate"](#node-anomaly-rate) (all dimensions) at time t. Likewise if we averaged a column then we would have the ["dimension anomaly rate"](#dimension-anomaly-rate) for each dimension over the time window t=1-10. Extending this idea, we can work out an overall ["anomaly rate"](#anomaly-rate) for the whole matrix or any subset of it we might be interested in. - -### Anomaly Detector - Node level anomaly events - -An ["anomaly detector"](#anomaly-detector) looks at all anomaly bits of a node. Netdata's anomaly detector produces an ["anomaly event"](#anomaly-event) when a the percentage of anomaly bits is high enough for a persistent amount of time. 
This anomaly event signals that there was sufficient evidence among all the anomaly bits that some strange behavior might have been detected in a more global sense across the node. -Essentially if the ["Node Anomaly Rate"](#node-anomaly-rate) (NAR) passes a defined threshold and stays above that threshold for a persistent amount of time, a "Node [Anomaly Event](#anomaly-event)" will be triggered. - -These anomaly events are currently exposed via `/api/v1/anomaly_events` - -**Note**: Clicking the link below will likely return an empty list of `[]`. This is the response when no anomaly events exist in the specified range. The example response below is illustrative of what the response would be when one or more anomaly events exist within the range of `after` to `before`. - -https://london.my-netdata.io/api/v1/anomaly_events?after=1638365182000&before=1638365602000 - -If an event exists within the window, the result would be a list of start and end times. - -``` -[ - [ - 1638367788, - 1638367851 - ] -] -``` +## Overview -Information about each anomaly event can then be found at the `/api/v1/anomaly_event_info` endpoint (making sure to pass the `after` and `before` params): +As of [`v1.32.0`](https://github.com/netdata/netdata/releases/tag/v1.32.0), Netdata comes with some ML powered [anomaly detection](https://en.wikipedia.org/wiki/Anomaly_detection) capabilities built into it and available to use out of the box, with zero configuration required (ML was enabled by default in `v1.35.0-29-nightly` in [this PR](https://github.com/netdata/netdata/pull/13158), previously it required a one line config change). -**Note**: If you click the below url you will get a `null` since no such anomaly event exists as the response is just an illustrative example taken from a node that did have such an anomaly event. 
+This means that in addition to collecting raw value metrics, the Netdata agent will also produce an [`anomaly-bit`](https://learn.netdata.cloud/docs/agent/ml#anomaly-bit---100--anomalous-0--normal) every second which will be `100` when recent raw metric values are considered anomalous by Netdata and `0` when they look normal. Once we aggregate beyond one second intervals this aggregated `anomaly-bit` becomes an ["anomaly rate"](https://learn.netdata.cloud/docs/agent/ml#anomaly-rate---averageanomaly-bit). -https://london.my-netdata.io/api/v1/anomaly_event_info?after=1638367788&before=1638367851 +To be as concrete as possible, the below api call shows how to access the raw anomaly bit of the `system.cpu` chart from the [london.my-netdata.io](https://london.my-netdata.io) Netdata demo server. Passing `options=anomaly-bit` returns the anomay bit instead of the raw metric value. ``` -[ - [ - 0.66, - "netdata.response_time|max" - ], - [ - 0.63, - "netdata.response_time|average" - ], - [ - 0.54, - "netdata.requests|requests" - ], - ... +https://london.my-netdata.io/api/v1/data?chart=system.cpu&options=anomaly-bit ``` -The query returns a list of dimension anomaly rates for all dimensions that were considered part of the detected anomaly event. - -**Note**: We plan to build additional anomaly detection and exploration features into both Netdata Agent and Netdata Cloud. The current endpoints are still under active development to power the upcoming features. - -## Configuration - -To enable anomaly detection: -1. Find and open the Netdata configuration file `netdata.conf`. -2. In the `[ml]` section, set `enabled = yes`. -3. Restart netdata (typically `sudo systemctl restart netdata`). - -**Note**: If you would like to learn more about configuring Netdata please see [the configuration guide](https://learn.netdata.cloud/guides/step-by-step/step-04). - -Below is a list of all the available configuration params and their default values. 
+If we aggregate the above to just 1 point by adding `points=1` we get an "[Anomaly Rate](https://learn.netdata.cloud/docs/agent/ml#anomaly-rate---averageanomaly-bit)": ``` -[ml] - # enabled = no - # maximum num samples to train = 14400 - # minimum num samples to train = 3600 - # train every = 3600 - # num samples to diff = 1 - # num samples to smooth = 3 - # num samples to lag = 5 - # maximum number of k-means iterations = 1000 - # dimension anomaly score threshold = 0.99 - # host anomaly rate threshold = 0.01000 - # minimum window size = 30.00000 - # maximum window size = 600.00000 - # idle window size = 30.00000 - # window minimum anomaly rate = 0.25000 - # anomaly event min dimension rate threshold = 0.05000 - # hosts to skip from training = !* - # charts to skip from training = !system.* !cpu.* !mem.* !disk.* !disk_* !ip.* !ipv4.* !ipv6.* !net.* !net_* !netfilter.* !services.* !apps.* !groups.* !user.* !ebpf.* !netdata.* * +https://london.my-netdata.io/api/v1/data?chart=system.cpu&options=anomaly-bit&points=1 ``` -### Descriptions (min/max) - -- `enabled`: `yes` to enable, `no` to disable. -- `maximum num samples to train`: (`3600`/`21600`) This is the maximum amount of time you would like to train each model on. For example, the default of `14400` trains on the preceding 4 hours of data, assuming an `update every` of 1 second. -- `minimum num samples to train`: (`900`/`21600`) This is the minimum amount of data required to be able to train a model. For example, the default of `3600` implies that once at least 1 hour of data is available for training, a model is trained, otherwise it is skipped and checked again at the next training run. -- `train every`: (`1800`/`21600`) This is how often each model will be retrained. For example, the default of `3600` means that each model is retrained every hour. 
Note: The training of all models is spread out across the `train every` period for efficiency, so in reality, it means that each model will be trained in a staggered manner within each `train every` period. -- `num samples to diff`: (`0`/`1`) This is a `0` or `1` to determine if you want the model to operate on differences of the raw data or just the raw data. For example, the default of `1` means that we take differences of the raw values. Using differences is more general and works on dimensions that might naturally tend to have some trends or cycles in them that is normal behavior to which we don't want to be too sensitive. -- `num samples to smooth`: (`0`/`5`) This is a small integer that controls the amount of smoothing applied as part of the feature processing used by the model. For example, the default of `3` means that the rolling average of the last 3 values is used. Smoothing like this helps the model be a little more robust to spiky types of dimensions that naturally "jump" up or down as part of their normal behavior. -- `num samples to lag`: (`0`/`5`) This is a small integer that determines how many lagged values of the dimension to include in the feature vector. For example, the default of `5` means that in addition to the most recent (by default, differenced and smoothed) value of the dimension, the feature vector will also include the 5 previous values too. Using lagged values in our feature representation allows the model to work over strange patterns over recent values of a dimension as opposed to just focusing on if the most recent value itself is big or small enough to be anomalous. -- `maximum number of k-means iterations`: This is a parameter that can be passed to the model to limit the number of iterations in training the k-means model. Vast majority of cases can ignore and leave as default. 
-- `dimension anomaly score threshold`: (`0.01`/`5.00`) This is the threshold at which an individual dimension at a specific timestep is considered anomalous or not. For example, the default of `0.99` means that a dimension with an anomaly score of 99% or higher is flagged as anomalous. This is a normalized probability based on the training data, so the default of 99% means that anything that is as strange (based on distance measure) or more strange as the most strange 1% of data observed during training will be flagged as anomalous. If you wanted to make the anomaly detection on individual dimensions more sensitive you could try a value like `0.90` (90%) or to make it less sensitive you could try `1.5` (150%). -- `host anomaly rate threshold`: (`0.0`/`1.0`) This is the percentage of dimensions (based on all those enabled for anomaly detection) that need to be considered anomalous at specific timestep for the host itself to be considered anomalous. For example, the default value of `0.01` means that if more than 1% of dimensions are anomalous at the same time then the host itself is considered in an anomalous state. -- `minimum window size`: The Netdata "Anomaly Detector" logic works over a rolling window of data. This parameter defines the minimum length of window to consider. If over this window the host is in an anomalous state then an anomaly detection event will be triggered. For example, the default of `30` means that the detector will initially work over a rolling window of 30 seconds. Note: The length of this window will be dynamic once an anomaly event has been triggered such that it will expand as needed until either the max length of an anomaly event is hit or the host settles back into a normal state with sufficiently decreased host level anomaly states in the rolling window. Note: If you wanted to adjust the higher level anomaly detector behavior then this is one parameter you might adjust to see the impact of on anomaly detection events. 
-- `maximum window size`: This parameter defines the maximum length of window to consider. If an anomaly event reaches this size, it will be closed. This is to provide an upper bound on the length of an anomaly event and cost of the anomaly detector logic for that event. -- `window minimum anomaly rate`: (`0.0`/`1.0`) This parameter corresponds to a threshold on the percentage of time in the rolling window that the host was considered in an anomalous state. For example, the default of `0.25` means that if the host is in an anomalous state for 25% of more of the rolling window then and anomaly event will be triggered or extended if one is already active. Note: If you want to make the anomaly detector itself less sensitive, you can adjust this value to something like `0.75` which would mean the host needs to be much more consistently in an anomalous state to trigger an anomaly detection event. Likewise, a lower value like `0.1` would make the anomaly detector more sensitive. -- `anomaly event min dimension rate threshold`: (`0.0`/`1.0`) This is a parameter that helps filter out irrelevant dimensions from anomaly events. For example, the default of `0.05` means that only dimensions that were considered anomalous for at least 5% of the anomaly event itself will be included in that anomaly event. The idea here is to just include dimensions that were consistently anomalous as opposed to those that may have just randomly happened to be anomalous at the same time. -- `hosts to skip from training`: This parameter allows you to turn off anomaly detection for any child hosts on a parent host by defining those you would like to skip from training here. For example, a value like `dev-*` skips all hosts on a parent that begin with the "dev-" prefix. The default value of `!*` means "don't skip any". -- `charts to skip from training`: This parameter allows you to exclude certain charts from anomaly detection by defining them here. 
By default, all charts, apart from a specific allow list of the typical basic Netdata charts, are excluded. If you have additional charts you would like to include for anomaly detection, you can add them here. **Note**: It is recommended to add charts in small groups and then measure any impact on performance before adding additional ones. - -## Charts - -Once enabled, the "Anomaly Detection" menu and charts will be available on the dashboard. - - - -In terms of anomaly detection, the most interesting charts would be the `anomaly_detection.dimensions` and `anomaly_detection.anomaly_rate` ones, which hold the `anomalous` and `anomaly_rate` dimensions that show the overall number of dimensions considered anomalous at any time and the corresponding anomaly rate. - -- `anomaly_detection.dimensions`: Total count of dimensions considered anomalous or normal. -- `anomaly_detection.dimensions`: Percentage of anomalous dimensions. -- `anomaly_detection.detector_window`: The length of the active window used by the detector. -- `anomaly_detection.detector_events`: Flags (0 or 1) to show when an anomaly event has been triggered by the detector. -- `anomaly_detection.prediction_stats`: Diagnostic metrics relating to prediction time of anomaly detection. -- `anomaly_detection.training_stats`: Diagnostic metrics relating to training time of anomaly detection. - -Below is an example of how these charts may look in the presence of an anomaly event. - -Initially we see a jump in `anomalous` dimensions: - - - -And a corresponding jump in the `anomaly_rate`: - - - -After a short while the rolling node anomaly rate goes `above_threshold`, and once it stays above threshold for long enough a `new_anomaly_event` is created: - - - -## Glossary - -#### _feature vector_ +The fundamentals of Netdata's anomaly detection approach and implementation are covered in lots more detail in the [agent ML documentation](https://learn.netdata.cloud/docs/agent/ml). 
-A [feature vector](https://en.wikipedia.org/wiki/Feature_(machine_learning)) is what the ML model is trained on and uses for prediction. The most simple feature vector would be just the latest raw dimension value itself [x]. By default Netdata will use a feature vector consisting of the 6 latest differences and smoothed values of the dimension so conceptually something like `[avg3(diff1(x-5)), avg3(diff1(x-4)), avg3(diff1(x-3)), avg3(diff1(x-2)), avg3(diff1(x-1)), avg3(diff1(x))]` which ends up being just 6 floating point numbers that try and represent the "shape" of recent data. +This guide will explain how to get started using these ML based anomaly detection capabilities within Netdata. -#### _anomaly score_ +## Anomaly Advisor -At prediction time the anomaly score is just the distance of the most recent feature vector to the trained cluster centers of the model, which are themselves just feature vectors, albeit supposedly the best most representative feature vectors that could be "learned" from the training data. So if the most recent feature vector is very far away in terms of [euclidean distance](https://en.wikipedia.org/wiki/Euclidean_distance#:~:text=In%20mathematics%2C%20the%20Euclidean%20distance,being%20called%20the%20Pythagorean%20distance.) it's more likely that the recent data it represents consists of some strange pattern not commonly found in the training data. +The [Anomaly Advisor](https://learn.netdata.cloud/docs/cloud/insights/anomaly-advisor) is the flagship anomaly detection feature within Netdata. In the "Anomalies" tab of Netdata you will see an overall "Anomaly Rate" chart that aggregates node level anomaly rate for all nodes in a space. The aim of this chart is to make it easy to quickly spot periods of time where the overall "[node anomaly rate](https://learn.netdata.cloud/docs/agent/ml#node-anomaly-rate)" is elevated in some unusual way and for what node or nodes this relates to. 
-#### _anomaly bit_ + -If the anomaly score is greater than a specified threshold then the most recent feature vector, and hence most recent raw data, is considered anomalous. Since storing the raw anomaly score would essentially double amount of storage space Netdata would need, we instead efficiently store just the anomaly bit in the existing internal Netdata data representation without any additional storage overhead. +Once an area on the Anomaly Rate chart is highlighted netdata will append a "heatmap" to the bottom of the screen that shows which metrics were more anomalous in the highlighted timeframe. Each row in the heatmap consists of an anomaly rate sparkline graph that can be expanded to reveal the raw underlying metric chart for that dimension. -#### _anomaly rate_ + -An anomaly rate is really just an average over one or more anomaly bits. An anomaly rate can be calculated over time for one or more dimensions or at a point in time across multiple dimensions, or some combination of the two. Its just an average of some collection of anomaly bits. +## Embedded Anomaly Rate Charts -#### _anomaly detector_ +Charts in both the [Overview](https://learn.netdata.cloud/docs/cloud/visualize/overview) and [single node dashboard](https://learn.netdata.cloud/docs/cloud/visualize/overview#jump-to-single-node-dashboards) tabs also expose the underlying anomaly rates for each dimension so users can easily see if the raw metrics are considered anomalous or not by Netdata. -The is essentially business logic that just tries to process a collection of anomaly bits to determine if there is enough active anomaly bits to merit investigation or declaration of a node level anomaly event. +Pressing the anomalies icon (next to the information icon in the chart header) will expand the anomaly rate chart to make it easy to see how the anomaly rate for any individual dimension corresponds to the raw underlying data. 
In the example below we can see that the spike in `system.pgpgio|in` corresponded to the anomaly rate for that dimension jumping to 100% for a small period of time until the spike passed. -#### _anomaly event_ + -Anomaly events are triggered by the anomaly detector and represent a window of time on the node with sufficiently elevated anomaly rates across all dimensions. +## Anomaly Rate Based Alerts -#### _dimension anomaly rate_ +It is possible to use the `anomaly-bit` when defining traditional Alerts within Netdata. The `anomaly-bit` is just another `options` parameter that can be passed as part of an [alarm line lookup](https://learn.netdata.cloud/docs/agent/health/reference#alarm-line-lookup). -The anomaly rate of a specific dimension over some window of time. +You can see some example ML based alert configurations below: -#### _node anomaly rate_ +- [Anomaly rate based CPU dimensions alarm](https://learn.netdata.cloud/docs/agent/health/reference#example-8---anomaly-rate-based-cpu-dimensions-alarm) +- [Anomaly rate based CPU chart alarm](https://learn.netdata.cloud/docs/agent/health/reference#example-9---anomaly-rate-based-cpu-chart-alarm) +- [Anomaly rate based node level alarm](https://learn.netdata.cloud/docs/agent/health/reference#example-10---anomaly-rate-based-node-level-alarm) +- More examples in the [`/health/health.d/ml.conf`](https://github.com/netdata/netdata/blob/master/health/health.d/ml.conf) file that ships with the agent. -The anomaly rate across all dimensions of a node. +## Learn More -## Notes +Check out the resources below to learn more about how Netdata is approaching ML: -- We would love to hear any feedback relating to this functionality, please email us at analytics-ml-team@netdata.cloud or come join us in the [🤖-ml-powered-monitoring](https://discord.gg/4eRSEUpJnc) channel of the Netdata discord. -- We are working on additional UI/UX based features that build on these core components to make them as useful as possible out of the box. 
-- Although not yet a core focus of this work, users could leverage the `anomaly_detection` chart dimensions and/or `anomaly-bit` options in defining alarms based on ML driven anomaly detection models. -- [This presentation](https://docs.google.com/presentation/d/18zkCvU3nKP-Bw_nQZuXTEa4PIVM6wppH3VUnAauq-RU/edit?usp=sharing) walks through some of the main concepts covered above in a more informal way. -- After restart Netdata will wait until `minimum num samples to train` observations of data are available before starting training and prediction. -- Netdata uses [dlib](https://github.com/davisking/dlib) under the hood for its core ML features. -- You should benchmark Netdata resource usage before and after enabling ML. Typical overhead ranges from 1-2% additional CPU at most. -- The "anomaly bit" has been implemented to be a building block to underpin many more ML based use cases that we plan to deliver soon. -- At its core Netdata uses an approach and problem formulation very similar to the Netdata python [anomalies collector](https://learn.netdata.cloud/docs/agent/collectors/python.d.plugin/anomalies), just implemented in a much much more efficient and scalable way in the agent in c++. So if you would like to learn more about the approach and are familiar with Python that is a useful resource to explore, as is the corresponding [deep dive tutorial](https://nbviewer.org/github/netdata/community/blob/main/netdata-agent-api/netdata-pandas/anomalies_collector_deepdive.ipynb) where the default model used is PCA instead of K-Means but the overall approach and formulation is similar.

\ No newline at end of file +- [Agent ML documentation](https://learn.netdata.cloud/docs/agent/ml). +- [Anomaly Advisor documentation](https://learn.netdata.cloud/docs/cloud/insights/anomaly-advisor). +- [Metric Correlations documentation](https://learn.netdata.cloud/docs/cloud/insights/metric-correlations). +- Anomaly Advisor [launch blog post](https://www.netdata.cloud/blog/introducing-anomaly-advisor-unsupervised-anomaly-detection-in-netdata/). +- Netdata Approach to ML [blog post](https://www.netdata.cloud/blog/our-approach-to-machine-learning/). +- `area/ml` related [GitHub Discussions](https://github.com/netdata/netdata/discussions?discussions_q=label%3Aarea%2Fml). +- Netdata Machine Learning Meetup [deck](https://docs.google.com/presentation/d/1rfSxktg2av2k-eMwMbjN0tXeo76KC33iBaxerYinovs/edit?usp=sharing) and [YouTube recording](https://www.youtube.com/watch?v=eJGWZHVQdNU). +- Netdata Anomaly Advisor [YouTube Playlist](https://youtube.com/playlist?list=PL-P-gAHfL2KPeUcCKmNHXC-LX-FfdO43j). diff --git a/docs/guides/troubleshoot/troubleshooting-agent-with-cloud-connection.md b/docs/guides/troubleshoot/troubleshooting-agent-with-cloud-connection.md new file mode 100644 index 000000000..ee214c814 --- /dev/null +++ b/docs/guides/troubleshoot/troubleshooting-agent-with-cloud-connection.md @@ -0,0 +1,117 @@ +<!-- +title: "Troubleshoot Agent-Cloud connectivity issues" +description: "A simple guide to troubleshoot occurrences where the Agent is showing as offline after claiming." +custom_edit_url: https://github.com/netdata/netdata/edit/master/guides/troubleshoot/troubleshooting-agent-with-cloud-connection.md +--> + +# Troubleshoot Agent-Cloud connectivity issues + +When you are claiming a node, you might not be able to immediately see it online in Netdata Cloud. +This could be due to an error in the claiming process or a temporary outage of some services. 
+ +We identified some scenarios that might cause this delay and possible actions you could take to overcome each situation. + +The most common explanation for the delay usually falls into one of the following three categories: + +- [The claiming process of the kickstart script was unsuccessful](#the-claiming-process-of-the-kickstart-script-was-unsuccessful) +- [Claiming on an older, deprecated version of the Agent](#claiming-on-an-older-deprecated-version-of-the-agent) +- [Network issues while connecting to the Cloud](#network-issues-while-connecting-to-the-cloud) + +## The claiming process of the kickstart script was unsuccessful + +Here, we will try to define some edge cases you might encounter when claiming a node. + +### The kickstart script auto-claimed the Agent but there was no error message displayed + +The kickstart script will install/update your Agent and then try to claim the node to the Cloud (if tokens are provided). To +complete the second part, the Agent must be running. In some platforms, the Netdata service cannot be enabled by default +and you must do it manually, using the following steps: + +1. Check if the Agent is running: + + ```bash + systemctl status netdata + ``` + + The expected output should contain info like this: + + ```bash + Active: active (running) since Wed 2022-07-06 12:25:02 EEST; 1h 40min ago + ``` + +2. Enable and start the Netdata Service. + + ```bash + systemctl enable netdata + systemctl start netdata + ``` + +3. Retry the kickstart claiming process. + +:::note + +In some cases a simple restart of the Agent can fix the issue. +Read more about [Starting, Stopping and Restarting the Agent](/docs/configure/start-stop-restart.md). + +::: + +## Claiming on an older, deprecated version of the Agent + +Make sure that you are using the latest version of Netdata if you are using the [Claiming script](https://learn.netdata.cloud/docs/agent/claim#claiming-script). 
+ +With the introduction of our new architecture, Agents running versions lower than `v1.32.0` can face claiming problems, so we recommend you [update the Netdata Agent](https://learn.netdata.cloud/docs/agent/packaging/installer/update) to the latest stable version. + +## Network issues while connecting to the Cloud + +### Verify that your IP is whitelisted from Netdata Cloud + +Most of the nodes change IPs dynamically. It is possible that your current IP has been restricted from accessing `app.netdata.cloud` due to security concerns. + +To verify this: + +1. Check the Agent's `aclk-state`. + + ```bash + sudo netdatacli aclk-state | grep "Banned By Cloud" + ``` + + The output will contain a line indicating if the IP is banned from `app.netdata.cloud`: + + ```bash + Banned By Cloud: yes + ``` + +2. If your node's IP is banned, you can: + + - Contact our team to whitelist your IP by submitting a ticket in the [Netdata forum](https://community.netdata.cloud/) + - Change your node's IP + +### Make sure that your node has internet connectivity and can resolve network domains + +1. Try to reach a well known host: + + ```bash + ping 8.8.8.8 + ``` + +2. If you can reach external IPs, then check your domain resolution. + + ```bash + host app.netdata.cloud + ``` + + The expected output should be something like this: + + ```bash + app.netdata.cloud is an alias for main-ingress-545609a41fcaf5d6.elb.us-east-1.amazonaws.com. + main-ingress-545609a41fcaf5d6.elb.us-east-1.amazonaws.com has address 54.198.178.11 + main-ingress-545609a41fcaf5d6.elb.us-east-1.amazonaws.com has address 44.207.131.212 + main-ingress-545609a41fcaf5d6.elb.us-east-1.amazonaws.com has address 44.196.50.41 + ``` + + :::info + + There will be cases in which the firewall restricts network access. In those cases, you need to whitelist the `app.netdata.cloud` domain to be able to see your nodes in Netdata Cloud. 
+ If you can't whitelist domains in your firewall, you can whitelist the IPs that the above command will produce, but keep in mind that they can change without any notice. + + :::
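
The manual checks in the troubleshooting guide above (the `aclk-state` ban check and the `host app.netdata.cloud` resolution check) could be wrapped in small shell helpers. The following is only a sketch: the function names `banned_by_cloud` and `count_resolved_addresses` are hypothetical and not part of Netdata, and the parsing assumes the command output looks like the samples shown in the guide.

```bash
#!/usr/bin/env bash
# Sketch only: these helper names are hypothetical, not shipped with Netdata.

# Reads `netdatacli aclk-state` output on stdin and reports the ban status,
# assuming the "Banned By Cloud: yes" line format shown in the guide.
banned_by_cloud() {
  if grep -q 'Banned By Cloud: yes'; then
    echo "banned"
  else
    echo "not banned"
  fi
}

# Reads `host app.netdata.cloud` output on stdin and prints how many
# "has address" lines it contains (0 suggests a resolution problem).
count_resolved_addresses() {
  # grep -c exits non-zero when the count is 0, so force a clean exit status.
  grep -c 'has address' || true
}

# On a real node, using the commands from the guide:
#   sudo netdatacli aclk-state | banned_by_cloud
#   host app.netdata.cloud | count_resolved_addresses
```

Because both helpers read from stdin, they can be exercised against saved command output without touching a live Agent.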