
| author | Daniel Baumann <daniel.baumann@progress-linux.org> | 2023-10-17 09:30:20 +0000 |
|---|---|---|
| committer | Daniel Baumann <daniel.baumann@progress-linux.org> | 2023-10-17 09:30:20 +0000 |
| commit | 386ccdd61e8256c8b21ee27ee2fc12438fc5ca98 (patch) | |
| tree | c9fbcacdb01f029f46133a5ba7ecd610c2bcb041 /health | |
| parent | Adding upstream version 1.42.4. (diff) | |
| download | netdata-386ccdd61e8256c8b21ee27ee2fc12438fc5ca98.tar.xz netdata-386ccdd61e8256c8b21ee27ee2fc12438fc5ca98.zip | |

Adding upstream version 1.43.0. (upstream/1.43.0)

Signed-off-by: Daniel Baumann <daniel.baumann@progress-linux.org>

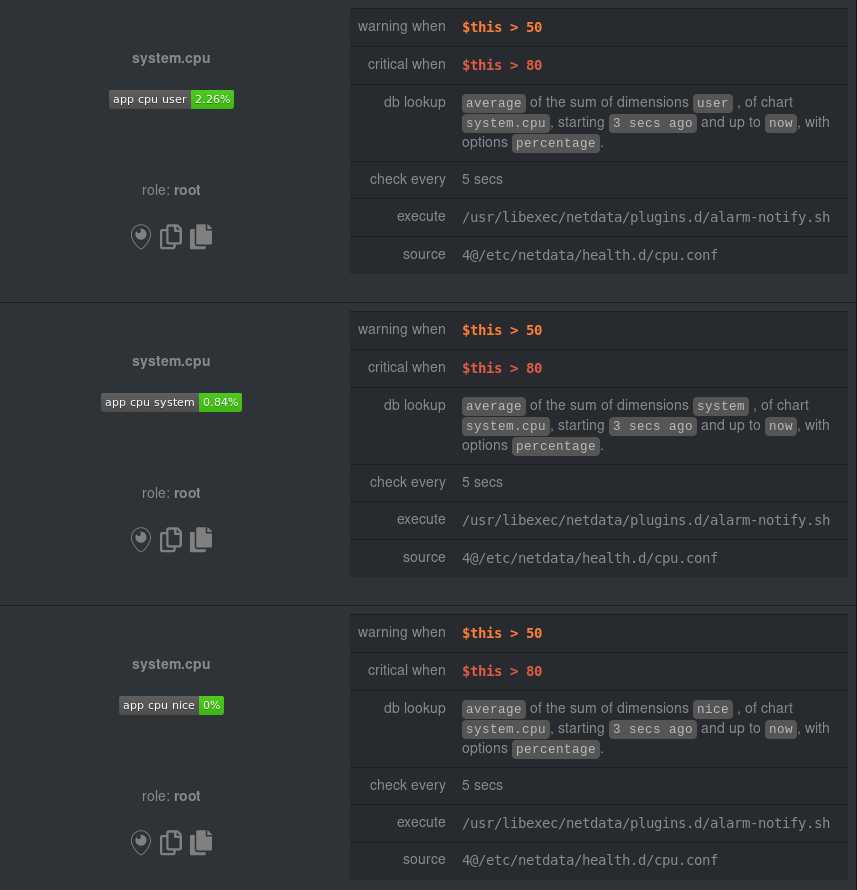

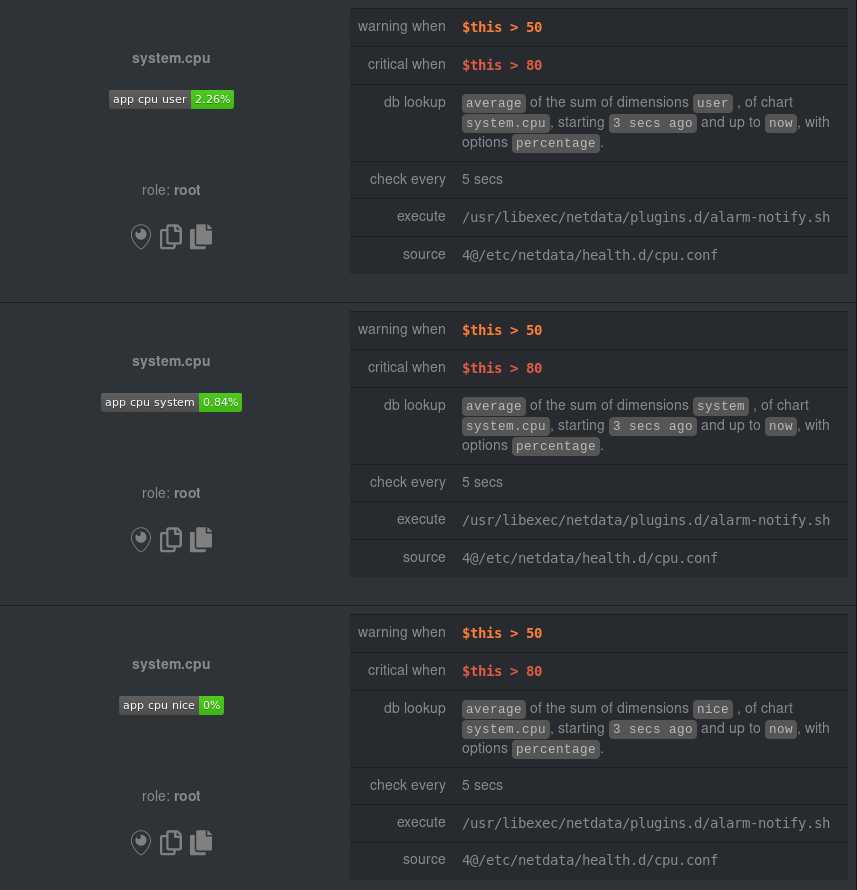

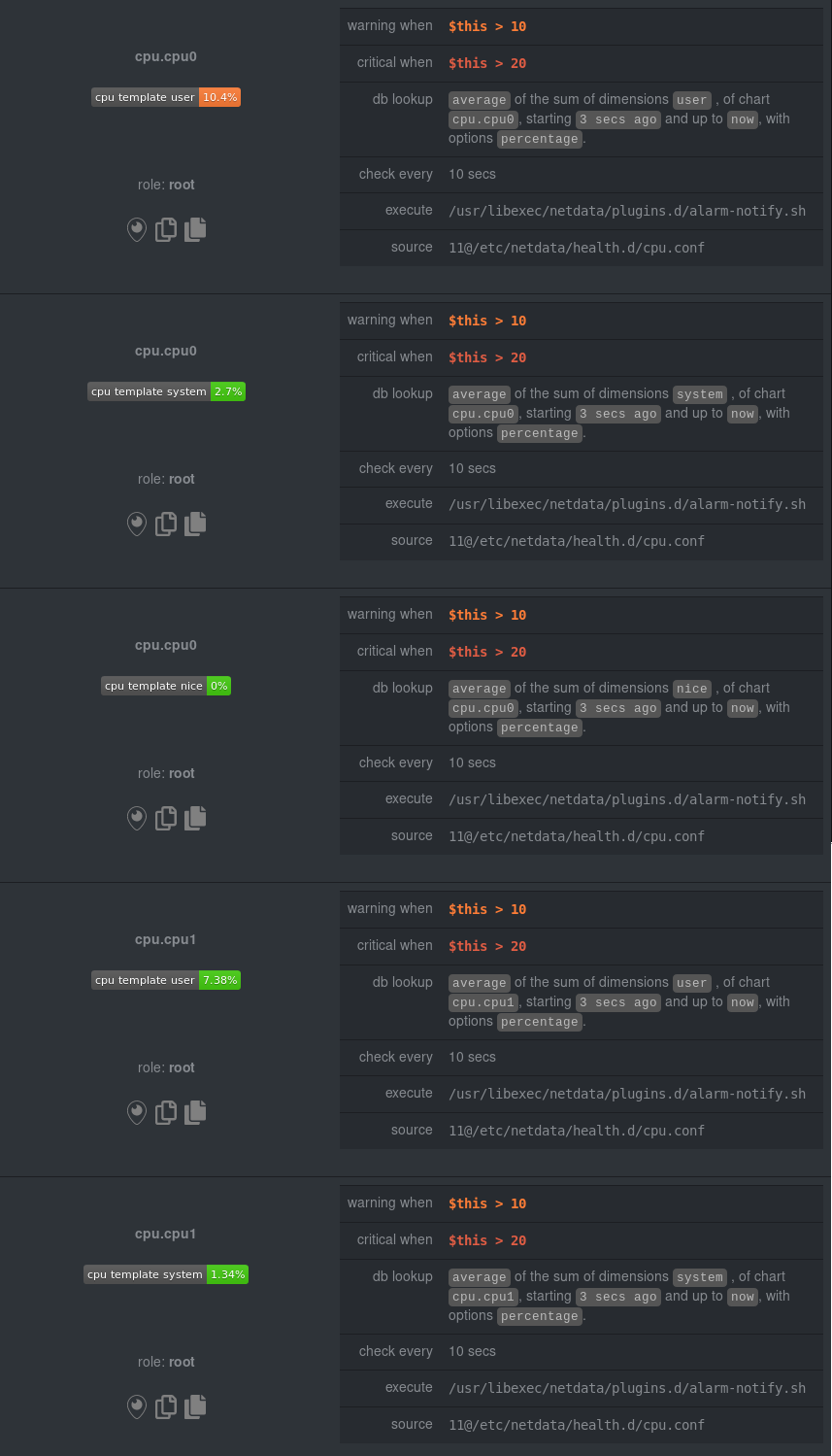

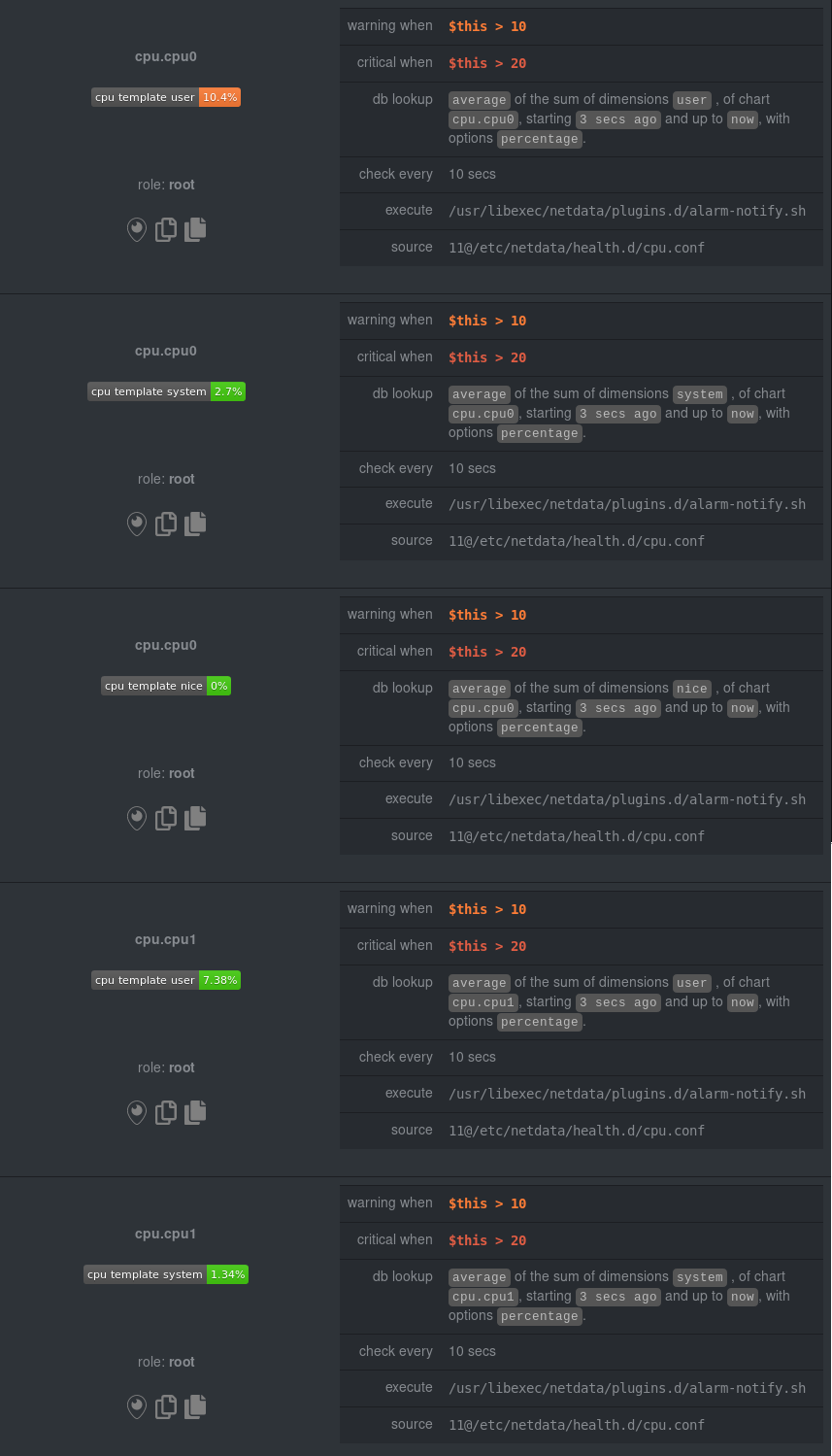

Diffstat (limited to 'health')

121 files changed, 3504 insertions, 2395 deletions