diff options

Diffstat (limited to 'collectors/python.d.plugin')

102 files changed, 9034 insertions, 4307 deletions

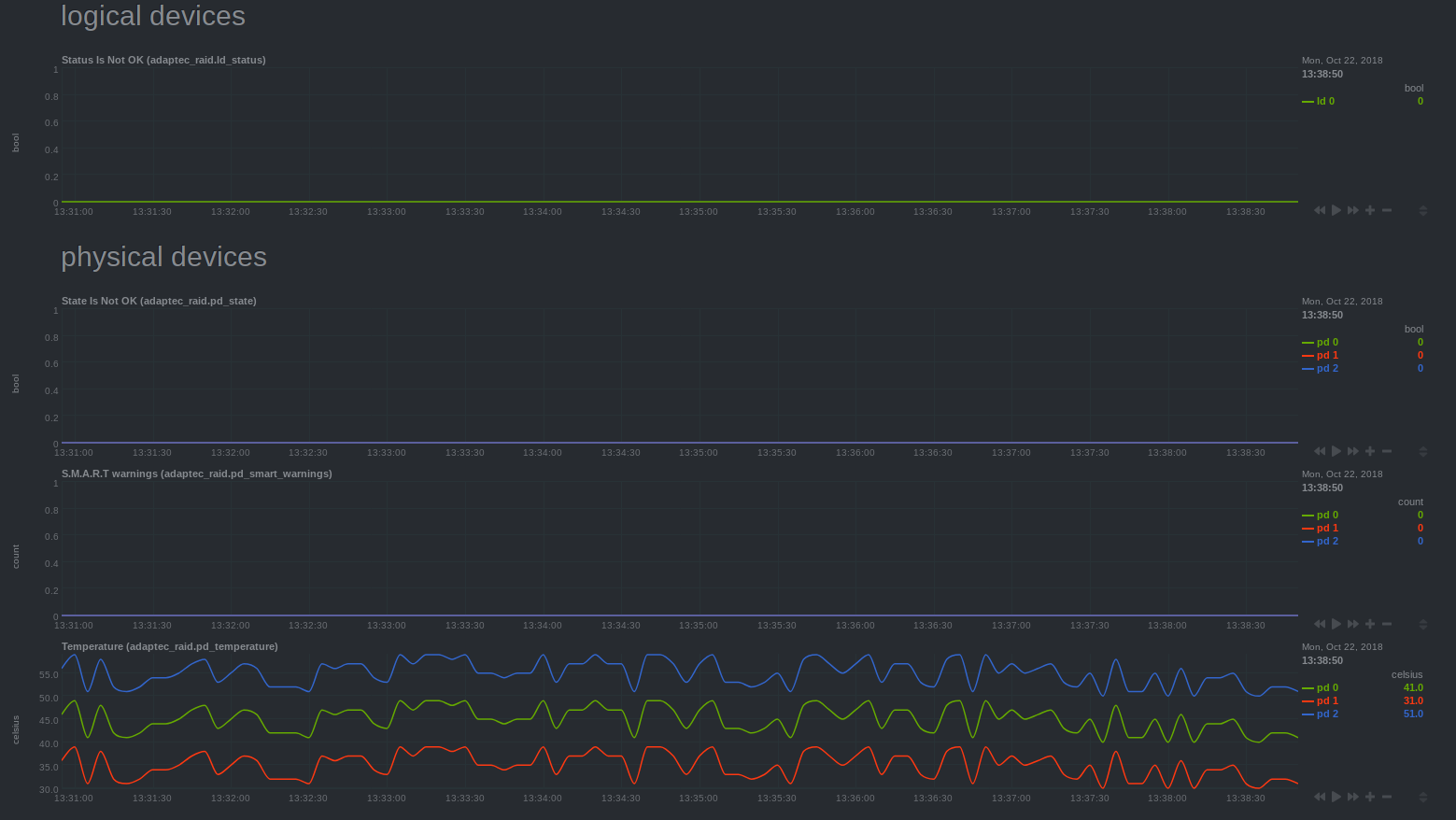

diff --git a/collectors/python.d.plugin/adaptec_raid/README.md b/collectors/python.d.plugin/adaptec_raid/README.md index 41d5b62e0..97a103eb9 100644..120000 --- a/collectors/python.d.plugin/adaptec_raid/README.md +++ b/collectors/python.d.plugin/adaptec_raid/README.md @@ -1,103 +1 @@ -<!-- -title: "Adaptec RAID controller monitoring with Netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/adaptec_raid/README.md" -sidebar_label: "Adaptec RAID" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Hardware" ---> - -# Adaptec RAID controller collector - -Collects logical and physical devices metrics using `arcconf` command-line utility. - -Executed commands: - -- `sudo -n arcconf GETCONFIG 1 LD` -- `sudo -n arcconf GETCONFIG 1 PD` - -## Requirements - -The module uses `arcconf`, which can only be executed by `root`. It uses -`sudo` and assumes that it is configured such that the `netdata` user can execute `arcconf` as root without a password. - -- Add to your `/etc/sudoers` file: - -`which arcconf` shows the full path to the binary. - -```bash -netdata ALL=(root) NOPASSWD: /path/to/arcconf -``` - -- Reset Netdata's systemd - unit [CapabilityBoundingSet](https://www.freedesktop.org/software/systemd/man/systemd.exec.html#Capabilities) (Linux - distributions with systemd) - -The default CapabilityBoundingSet doesn't allow using `sudo`, and is quite strict in general. Resetting is not optimal, but a next-best solution given the inability to execute `arcconf` using `sudo`. - - -As the `root` user, do the following: - -```cmd -mkdir /etc/systemd/system/netdata.service.d -echo -e '[Service]\nCapabilityBoundingSet=~' | tee /etc/systemd/system/netdata.service.d/unset-capability-bounding-set.conf -systemctl daemon-reload -systemctl restart netdata.service -``` - -## Charts - -- Logical Device Status -- Physical Device State -- Physical Device S.M.A.R.T warnings -- Physical Device Temperature - -## Enable the collector - -The `adaptec_raid` collector is disabled by default. To enable it, use `edit-config` from the -Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`, to edit the `python.d.conf` -file. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d.conf -``` - -Change the value of the `adaptec_raid` setting to `yes`. Save the file and restart the Netdata Agent with `sudo -systemctl restart netdata`, or the [appropriate method](https://github.com/netdata/netdata/blob/master/docs/configure/start-stop-restart.md) for your system. - -## Configuration - -Edit the `python.d/adaptec_raid.conf` configuration file using `edit-config` from the -Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/adaptec_raid.conf -``` - - - - - - -### Troubleshooting - -To troubleshoot issues with the `adaptec_raid` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `adaptec_raid` module in debug mode: - -```bash -./python.d.plugin adaptec_raid debug trace -``` - +integrations/adaptecraid.md

\ No newline at end of file diff --git a/collectors/python.d.plugin/adaptec_raid/integrations/adaptecraid.md b/collectors/python.d.plugin/adaptec_raid/integrations/adaptecraid.md new file mode 100644 index 000000000..59e359d0d --- /dev/null +++ b/collectors/python.d.plugin/adaptec_raid/integrations/adaptecraid.md @@ -0,0 +1,203 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/adaptec_raid/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/adaptec_raid/metadata.yaml" +sidebar_label: "AdaptecRAID" +learn_status: "Published" +learn_rel_path: "Data Collection/Storage, Mount Points and Filesystems" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# AdaptecRAID + + +<img src="https://netdata.cloud/img/adaptec.svg" width="150"/> + + +Plugin: python.d.plugin +Module: adaptec_raid + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +This collector monitors Adaptec RAID hardware storage controller metrics about both physical and logical drives. + + +It uses the arcconf command line utility (from adaptec) to monitor your raid controller. + +Executed commands: + - `sudo -n arcconf GETCONFIG 1 LD` + - `sudo -n arcconf GETCONFIG 1 PD` + + +This collector is supported on all platforms. + +This collector only supports collecting metrics from a single instance of this integration. + +The module uses arcconf, which can only be executed by root. It uses sudo and assumes that it is configured such that the netdata user can execute arcconf as root without a password. + +### Default Behavior + +#### Auto-Detection + +After all the permissions are satisfied, netdata should be to execute commands via the arcconf command line utility + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per AdaptecRAID instance + +These metrics refer to the entire monitored application. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| adaptec_raid.ld_status | a dimension per logical device | bool | +| adaptec_raid.pd_state | a dimension per physical device | bool | +| adaptec_raid.smart_warnings | a dimension per physical device | count | +| adaptec_raid.temperature | a dimension per physical device | celsius | + + + +## Alerts + + +The following alerts are available: + +| Alert name | On metric | Description | +|:------------|:----------|:------------| +| [ adaptec_raid_ld_status ](https://github.com/netdata/netdata/blob/master/health/health.d/adaptec_raid.conf) | adaptec_raid.ld_status | logical device status is failed or degraded | +| [ adaptec_raid_pd_state ](https://github.com/netdata/netdata/blob/master/health/health.d/adaptec_raid.conf) | adaptec_raid.pd_state | physical device state is not online | + + +## Setup + +### Prerequisites + +#### Grant permissions for netdata, to run arcconf as sudoer + +The module uses arcconf, which can only be executed by root. It uses sudo and assumes that it is configured such that the netdata user can execute arcconf as root without a password. + +Add to your /etc/sudoers file: +which arcconf shows the full path to the binary. + +```bash +netdata ALL=(root) NOPASSWD: /path/to/arcconf +``` + + +#### Reset Netdata's systemd unit CapabilityBoundingSet (Linux distributions with systemd) + +The default CapabilityBoundingSet doesn't allow using sudo, and is quite strict in general. Resetting is not optimal, but a next-best solution given the inability to execute arcconf using sudo. + +As root user, do the following: + +```bash +mkdir /etc/systemd/system/netdata.service.d +echo -e '[Service]\nCapabilityBoundingSet=~' | tee /etc/systemd/system/netdata.service.d/unset-capability-bounding-set.conf +systemctl daemon-reload +systemctl restart netdata.service +``` + + + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/adaptec_raid.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/adaptec_raid.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| update_every | Sets the default data collection frequency. | 5 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | + +</details> + +#### Examples + +##### Basic + +A basic example configuration per job + +```yaml +job_name: + name: my_job_name + update_every: 1 # the JOB's data collection frequency + priority: 60000 # the JOB's order on the dashboard + penalty: yes # the JOB's penalty + autodetection_retry: 0 # the JOB's re-check interval in seconds + +``` + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `adaptec_raid` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin adaptec_raid debug trace + ``` + + diff --git a/collectors/python.d.plugin/adaptec_raid/metadata.yaml b/collectors/python.d.plugin/adaptec_raid/metadata.yaml index 7ee4ce7c2..c69baff4a 100644 --- a/collectors/python.d.plugin/adaptec_raid/metadata.yaml +++ b/collectors/python.d.plugin/adaptec_raid/metadata.yaml @@ -27,8 +27,8 @@ modules: It uses the arcconf command line utility (from adaptec) to monitor your raid controller. Executed commands: - - sudo -n arcconf GETCONFIG 1 LD - - sudo -n arcconf GETCONFIG 1 PD + - `sudo -n arcconf GETCONFIG 1 LD` + - `sudo -n arcconf GETCONFIG 1 PD` supported_platforms: include: [] exclude: [] diff --git a/collectors/python.d.plugin/alarms/README.md b/collectors/python.d.plugin/alarms/README.md index 0f956b291..85759ae6c 100644..120000 --- a/collectors/python.d.plugin/alarms/README.md +++ b/collectors/python.d.plugin/alarms/README.md @@ -1,89 +1 @@ -<!-- -title: "Alarms" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/alarms/README.md" -sidebar_label: "Alarms" -learn_status: "Published" -learn_rel_path: "Integrations/Monitor/Netdata" ---> - -# Alarms - -This collector creates an 'Alarms' menu with one line plot showing alarm states over time. Alarm states are mapped to integer values according to the below default mapping. Any alarm status types not in this mapping will be ignored (Note: This mapping can be changed by editing the `status_map` in the `alarms.conf` file). If you would like to learn more about the different alarm statuses check out the docs [here](https://github.com/netdata/netdata/blob/master/health/REFERENCE.md#alarm-statuses). - -``` -{ - 'CLEAR': 0, - 'WARNING': 1, - 'CRITICAL': 2 -} -``` - -## Charts - -Below is an example of the chart produced when running `stress-ng --all 2` for a few minutes. You can see the various warning and critical alarms raised. - - - -## Configuration - -Enable the collector and [restart Netdata](https://github.com/netdata/netdata/blob/master/docs/configure/start-stop-restart.md). - -```bash -cd /etc/netdata/ -sudo ./edit-config python.d.conf -# Set `alarms: no` to `alarms: yes` -sudo systemctl restart netdata -``` - -If needed, edit the `python.d/alarms.conf` configuration file using `edit-config` from the your agent's [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is usually at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/alarms.conf -``` - -The `alarms` specific part of the `alarms.conf` file should look like this: - -```yaml -# what url to pull data from -local: - url: 'http://127.0.0.1:19999/api/v1/alarms?all' - # define how to map alarm status to numbers for the chart - status_map: - CLEAR: 0 - WARNING: 1 - CRITICAL: 2 - # set to true to include a chart with calculated alarm values over time - collect_alarm_values: false - # define the type of chart for plotting status over time e.g. 'line' or 'stacked' - alarm_status_chart_type: 'line' - # a "," separated list of words you want to filter alarm names for. For example 'cpu,load' would filter for only - # alarms with "cpu" or "load" in alarm name. Default includes all. - alarm_contains_words: '' - # a "," separated list of words you want to exclude based on alarm name. For example 'cpu,load' would exclude - # all alarms with "cpu" or "load" in alarm name. Default excludes None. - alarm_excludes_words: '' -``` - -It will default to pulling all alarms at each time step from the Netdata rest api at `http://127.0.0.1:19999/api/v1/alarms?all` -### Troubleshooting - -To troubleshoot issues with the `alarms` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `alarms` module in debug mode: - -```bash -./python.d.plugin alarms debug trace -``` - +integrations/netdata_agent_alarms.md

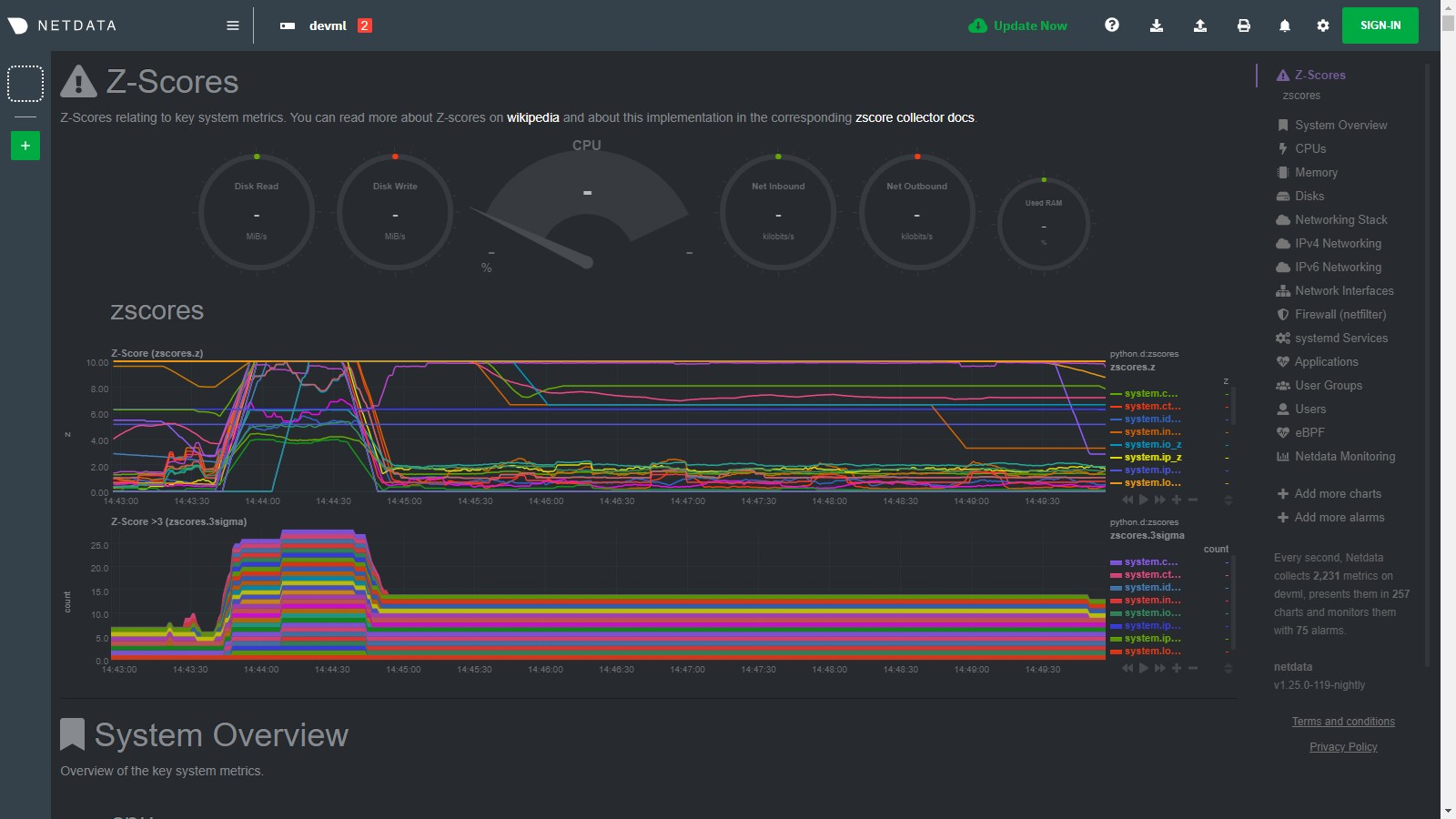

\ No newline at end of file diff --git a/collectors/python.d.plugin/alarms/integrations/netdata_agent_alarms.md b/collectors/python.d.plugin/alarms/integrations/netdata_agent_alarms.md new file mode 100644 index 000000000..95e4a4a3b --- /dev/null +++ b/collectors/python.d.plugin/alarms/integrations/netdata_agent_alarms.md @@ -0,0 +1,200 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/alarms/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/alarms/metadata.yaml" +sidebar_label: "Netdata Agent alarms" +learn_status: "Published" +learn_rel_path: "Data Collection/Other" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# Netdata Agent alarms + +Plugin: python.d.plugin +Module: alarms + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +This collector creates an 'Alarms' menu with one line plot of `alarms.status`. + + +Alarm status is read from the Netdata agent rest api [`/api/v1/alarms?all`](https://learn.netdata.cloud/api#/alerts/alerts1). + + +This collector is supported on all platforms. + +This collector supports collecting metrics from multiple instances of this integration, including remote instances. + + +### Default Behavior + +#### Auto-Detection + +It discovers instances of Netdata running on localhost, and gathers metrics from `http://127.0.0.1:19999/api/v1/alarms?all`. `CLEAR` status is mapped to `0`, `WARNING` to `1` and `CRITICAL` to `2`. Also, by default all alarms produced will be monitored. + + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per Netdata Agent alarms instance + +These metrics refer to the entire monitored application. + + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| alarms.status | a dimension per alarm representing the latest status of the alarm. | status | +| alarms.values | a dimension per alarm representing the latest collected value of the alarm. | value | + + + +## Alerts + +There are no alerts configured by default for this integration. + + +## Setup + +### Prerequisites + +No action required. + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/alarms.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/alarms.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| url | Netdata agent alarms endpoint to collect from. Can be local or remote so long as reachable by agent. | http://127.0.0.1:19999/api/v1/alarms?all | True | +| status_map | Mapping of alarm status to integer number that will be the metric value collected. | {"CLEAR": 0, "WARNING": 1, "CRITICAL": 2} | True | +| collect_alarm_values | set to true to include a chart with calculated alarm values over time. | False | True | +| alarm_status_chart_type | define the type of chart for plotting status over time e.g. 'line' or 'stacked'. | line | True | +| alarm_contains_words | A "," separated list of words you want to filter alarm names for. For example 'cpu,load' would filter for only alarms with "cpu" or "load" in alarm name. Default includes all. | | True | +| alarm_excludes_words | A "," separated list of words you want to exclude based on alarm name. For example 'cpu,load' would exclude all alarms with "cpu" or "load" in alarm name. Default excludes None. | | True | +| update_every | Sets the default data collection frequency. | 10 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | +| name | Job name. This value will overwrite the `job_name` value. JOBS with the same name are mutually exclusive. Only one of them will be allowed running at any time. This allows autodetection to try several alternatives and pick the one that works. | | False | + +</details> + +#### Examples + +##### Basic + +A basic example configuration. + +```yaml +jobs: + url: 'http://127.0.0.1:19999/api/v1/alarms?all' + +``` +##### Advanced + +An advanced example configuration with multiple jobs collecting different subsets of alarms for plotting on different charts. +"ML" job will collect status and values for all alarms with "ml_" in the name. Default job will collect status for all other alarms. + + +<details><summary>Config</summary> + +```yaml +ML: + update_every: 5 + url: 'http://127.0.0.1:19999/api/v1/alarms?all' + status_map: + CLEAR: 0 + WARNING: 1 + CRITICAL: 2 + collect_alarm_values: true + alarm_status_chart_type: 'stacked' + alarm_contains_words: 'ml_' + +Default: + update_every: 5 + url: 'http://127.0.0.1:19999/api/v1/alarms?all' + status_map: + CLEAR: 0 + WARNING: 1 + CRITICAL: 2 + collect_alarm_values: false + alarm_status_chart_type: 'stacked' + alarm_excludes_words: 'ml_' + +``` +</details> + + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `alarms` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin alarms debug trace + ``` + + diff --git a/collectors/python.d.plugin/am2320/README.md b/collectors/python.d.plugin/am2320/README.md index b8a6acb0b..0bc5ea90e 100644..120000 --- a/collectors/python.d.plugin/am2320/README.md +++ b/collectors/python.d.plugin/am2320/README.md @@ -1,76 +1 @@ -<!-- -title: "AM2320 sensor monitoring with netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/am2320/README.md" -sidebar_label: "AM2320" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Remotes/Devices" ---> - -# AM2320 sensor monitoring with netdata - -Displays a graph of the temperature and humidity from a AM2320 sensor. - -## Requirements - - Adafruit Circuit Python AM2320 library - - Adafruit AM2320 I2C sensor - - Python 3 (Adafruit libraries are not Python 2.x compatible) - - -It produces the following charts: -1. **Temperature** -2. **Humidity** - -## Configuration - -Edit the `python.d/am2320.conf` configuration file using `edit-config` from the Netdata [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/am2320.conf -``` - -Raspberry Pi Instructions: - -Hardware install: -Connect the am2320 to the Raspberry Pi I2C pins - -Raspberry Pi 3B/4 Pins: - -- Board 3.3V (pin 1) to sensor VIN (pin 1) -- Board SDA (pin 3) to sensor SDA (pin 2) -- Board GND (pin 6) to sensor GND (pin 3) -- Board SCL (pin 5) to sensor SCL (pin 4) - -You may also need to add two I2C pullup resistors if your board does not already have them. The Raspberry Pi does have internal pullup resistors but it doesn't hurt to add them anyway. You can use 2.2K - 10K but we will just use 10K. The resistors go from VDD to SCL and SDA each. - -Software install: -- `sudo pip3 install adafruit-circuitpython-am2320` -- edit `/etc/netdata/netdata.conf` -- find `[plugin:python.d]` -- add `command options = -ppython3` -- save the file. -- restart the netdata service. -- check the dashboard. - -### Troubleshooting - -To troubleshoot issues with the `am2320` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `am2320` module in debug mode: - -```bash -./python.d.plugin am2320 debug trace -``` - +integrations/am2320.md

\ No newline at end of file diff --git a/collectors/python.d.plugin/am2320/integrations/am2320.md b/collectors/python.d.plugin/am2320/integrations/am2320.md new file mode 100644 index 000000000..9b41a8fd6 --- /dev/null +++ b/collectors/python.d.plugin/am2320/integrations/am2320.md @@ -0,0 +1,180 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/am2320/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/am2320/metadata.yaml" +sidebar_label: "AM2320" +learn_status: "Published" +learn_rel_path: "Data Collection/Hardware Devices and Sensors" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# AM2320 + + +<img src="https://netdata.cloud/img/microchip.svg" width="150"/> + + +Plugin: python.d.plugin +Module: am2320 + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +This collector monitors AM2320 sensor metrics about temperature and humidity. + +It retrieves temperature and humidity values by contacting an AM2320 sensor over i2c. + +This collector is supported on all platforms. + +This collector only supports collecting metrics from a single instance of this integration. + + +### Default Behavior + +#### Auto-Detection + +Assuming prerequisites are met, the collector will try to connect to the sensor via i2c + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per AM2320 instance + +These metrics refer to the entire monitored application. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| am2320.temperature | temperature | celsius | +| am2320.humidity | humidity | percentage | + + + +## Alerts + +There are no alerts configured by default for this integration. + + +## Setup + +### Prerequisites + +#### Sensor connection to a Raspberry Pi + +Connect the am2320 to the Raspberry Pi I2C pins + +Raspberry Pi 3B/4 Pins: + +- Board 3.3V (pin 1) to sensor VIN (pin 1) +- Board SDA (pin 3) to sensor SDA (pin 2) +- Board GND (pin 6) to sensor GND (pin 3) +- Board SCL (pin 5) to sensor SCL (pin 4) + +You may also need to add two I2C pullup resistors if your board does not already have them. The Raspberry Pi does have internal pullup resistors but it doesn't hurt to add them anyway. You can use 2.2K - 10K but we will just use 10K. The resistors go from VDD to SCL and SDA each. + + +#### Software requirements + +Install the Adafruit Circuit Python AM2320 library: + +`sudo pip3 install adafruit-circuitpython-am2320` + + + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/am2320.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/am2320.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| update_every | Sets the default data collection frequency. | 5 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | +| name | Job name. This value will overwrite the `job_name` value. JOBS with the same name are mutually exclusive. Only one of them will be allowed running at any time. This allows autodetection to try several alternatives and pick the one that works. | | False | + +</details> + +#### Examples + +##### Local sensor + +A basic JOB configuration + +```yaml +local_sensor: + name: 'Local AM2320' + +``` + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `am2320` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin am2320 debug trace + ``` + + diff --git a/collectors/python.d.plugin/beanstalk/README.md b/collectors/python.d.plugin/beanstalk/README.md index c86ca354a..4efe13889 100644..120000 --- a/collectors/python.d.plugin/beanstalk/README.md +++ b/collectors/python.d.plugin/beanstalk/README.md @@ -1,156 +1 @@ -<!-- -title: "Beanstalk monitoring with Netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/beanstalk/README.md" -sidebar_label: "Beanstalk" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Message brokers" ---> - -# Beanstalk collector - -Provides server and tube-level statistics. - -## Requirements - -- `python-beanstalkc` - -**Server statistics:** - -1. **Cpu usage** in cpu time - - - user - - system - -2. **Jobs rate** in jobs/s - - - total - - timeouts - -3. **Connections rate** in connections/s - - - connections - -4. **Commands rate** in commands/s - - - put - - peek - - peek-ready - - peek-delayed - - peek-buried - - reserve - - use - - watch - - ignore - - delete - - release - - bury - - kick - - stats - - stats-job - - stats-tube - - list-tubes - - list-tube-used - - list-tubes-watched - - pause-tube - -5. **Current tubes** in tubes - - - tubes - -6. **Current jobs** in jobs - - - urgent - - ready - - reserved - - delayed - - buried - -7. **Current connections** in connections - - - written - - producers - - workers - - waiting - -8. **Binlog** in records/s - - - written - - migrated - -9. **Uptime** in seconds - - - uptime - -**Per tube statistics:** - -1. **Jobs rate** in jobs/s - - - jobs - -2. **Jobs** in jobs - - - using - - ready - - reserved - - delayed - - buried - -3. **Connections** in connections - - - using - - waiting - - watching - -4. **Commands** in commands/s - - - deletes - - pauses - -5. **Pause** in seconds - - - since - - left - -## Configuration - -Edit the `python.d/beanstalk.conf` configuration file using `edit-config` from the Netdata [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/beanstalk.conf -``` - -Sample: - -```yaml -host : '127.0.0.1' -port : 11300 -``` - -If no configuration is given, module will attempt to connect to beanstalkd on `127.0.0.1:11300` address - - - - -### Troubleshooting - -To troubleshoot issues with the `beanstalk` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `beanstalk` module in debug mode: - -```bash -./python.d.plugin beanstalk debug trace -``` - +integrations/beanstalk.md

\ No newline at end of file diff --git a/collectors/python.d.plugin/beanstalk/integrations/beanstalk.md b/collectors/python.d.plugin/beanstalk/integrations/beanstalk.md new file mode 100644 index 000000000..cf2f0dac1 --- /dev/null +++ b/collectors/python.d.plugin/beanstalk/integrations/beanstalk.md @@ -0,0 +1,218 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/beanstalk/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/beanstalk/metadata.yaml" +sidebar_label: "Beanstalk" +learn_status: "Published" +learn_rel_path: "Data Collection/Message Brokers" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# Beanstalk + + +<img src="https://netdata.cloud/img/beanstalk.svg" width="150"/> + + +Plugin: python.d.plugin +Module: beanstalk + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +Monitor Beanstalk metrics to enhance job queueing and processing efficiency. Track job rates, processing times, and queue lengths for better task management. + +The collector uses the `beanstalkc` python module to connect to a `beanstalkd` service and gather metrics. + +This collector is supported on all platforms. + +This collector supports collecting metrics from multiple instances of this integration, including remote instances. + + +### Default Behavior + +#### Auto-Detection + +If no configuration is given, module will attempt to connect to beanstalkd on 127.0.0.1:11300 address. + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per Beanstalk instance + +These metrics refer to the entire monitored application. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| beanstalk.cpu_usage | user, system | cpu time | +| beanstalk.jobs_rate | total, timeouts | jobs/s | +| beanstalk.connections_rate | connections | connections/s | +| beanstalk.commands_rate | put, peek, peek-ready, peek-delayed, peek-buried, reserve, use, watch, ignore, delete, bury, kick, stats, stats-job, stats-tube, list-tubes, list-tube-used, list-tubes-watched, pause-tube | commands/s | +| beanstalk.connections_rate | tubes | tubes | +| beanstalk.current_jobs | urgent, ready, reserved, delayed, buried | jobs | +| beanstalk.current_connections | written, producers, workers, waiting | connections | +| beanstalk.binlog | written, migrated | records/s | +| beanstalk.uptime | uptime | seconds | + +### Per tube + +Metrics related to Beanstalk tubes. Each tube produces its own set of the following metrics. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| beanstalk.jobs_rate | jobs | jobs/s | +| beanstalk.jobs | urgent, ready, reserved, delayed, buried | jobs | +| beanstalk.connections | using, waiting, watching | connections | +| beanstalk.commands | deletes, pauses | commands/s | +| beanstalk.pause | since, left | seconds | + + + +## Alerts + + +The following alerts are available: + +| Alert name | On metric | Description | +|:------------|:----------|:------------| +| [ beanstalk_server_buried_jobs ](https://github.com/netdata/netdata/blob/master/health/health.d/beanstalkd.conf) | beanstalk.current_jobs | number of buried jobs across all tubes. You need to manually kick them so they can be processed. Presence of buried jobs in a tube does not affect new jobs. | + + +## Setup + +### Prerequisites + +#### beanstalkc python module + +The collector requires the `beanstalkc` python module to be installed. + + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/beanstalk.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/beanstalk.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| update_every | Sets the default data collection frequency. | 5 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | +| name | Job name. This value will overwrite the `job_name` value. JOBS with the same name are mutually exclusive. Only one of them will be allowed running at any time. This allows autodetection to try several alternatives and pick the one that works. | | False | +| host | IP or URL to a beanstalk service. | 127.0.0.1 | False | +| port | Port to the IP or URL to a beanstalk service. | 11300 | False | + +</details> + +#### Examples + +##### Remote beanstalk server + +A basic remote beanstalk server + +```yaml +remote: + name: 'beanstalk' + host: '1.2.3.4' + port: 11300 + +``` +##### Multi-instance + +> **Note**: When you define multiple jobs, their names must be unique. + +Collecting metrics from local and remote instances. + + +<details><summary>Config</summary> + +```yaml +localhost: + name: 'local_beanstalk' + host: '127.0.0.1' + port: 11300 + +remote_job: + name: 'remote_beanstalk' + host: '192.0.2.1' + port: 113000 + +``` +</details> + + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `beanstalk` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin beanstalk debug trace + ``` + + diff --git a/collectors/python.d.plugin/beanstalk/metadata.yaml b/collectors/python.d.plugin/beanstalk/metadata.yaml index b6ff2f116..7dff9cb3a 100644 --- a/collectors/python.d.plugin/beanstalk/metadata.yaml +++ b/collectors/python.d.plugin/beanstalk/metadata.yaml @@ -8,7 +8,7 @@ modules: link: "https://beanstalkd.github.io/" categories: - data-collection.message-brokers - - data-collection.task-queues + #- data-collection.task-queues icon_filename: "beanstalk.svg" related_resources: integrations: diff --git a/collectors/python.d.plugin/bind_rndc/README.md b/collectors/python.d.plugin/bind_rndc/README.md index aa173f385..03a182ae8 100644..120000 --- a/collectors/python.d.plugin/bind_rndc/README.md +++ b/collectors/python.d.plugin/bind_rndc/README.md @@ -1,102 +1 @@ -<!-- -title: "ISC Bind monitoring with Netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/bind_rndc/README.md" -sidebar_label: "ISC Bind" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Webapps" ---> - -# ISC Bind collector - -Collects Name server summary performance statistics using `rndc` tool. - -## Requirements - -- Version of bind must be 9.6 + -- Netdata must have permissions to run `rndc stats` - -It produces: - -1. **Name server statistics** - - - requests - - responses - - success - - auth_answer - - nonauth_answer - - nxrrset - - failure - - nxdomain - - recursion - - duplicate - - rejections - -2. **Incoming queries** - - - RESERVED0 - - A - - NS - - CNAME - - SOA - - PTR - - MX - - TXT - - X25 - - AAAA - - SRV - - NAPTR - - A6 - - DS - - RSIG - - DNSKEY - - SPF - - ANY - - DLV - -3. **Outgoing queries** - -- Same as Incoming queries - -## Configuration - -Edit the `python.d/bind_rndc.conf` configuration file using `edit-config` from the Netdata [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/bind_rndc.conf -``` - -Sample: - -```yaml -local: - named_stats_path : '/var/log/bind/named.stats' -``` - -If no configuration is given, module will attempt to read named.stats file at `/var/log/bind/named.stats` - - - - -### Troubleshooting - -To troubleshoot issues with the `bind_rndc` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `bind_rndc` module in debug mode: - -```bash -./python.d.plugin bind_rndc debug trace -``` - +integrations/isc_bind_rndc.md

\ No newline at end of file diff --git a/collectors/python.d.plugin/bind_rndc/integrations/isc_bind_rndc.md b/collectors/python.d.plugin/bind_rndc/integrations/isc_bind_rndc.md new file mode 100644 index 000000000..cc847272d --- /dev/null +++ b/collectors/python.d.plugin/bind_rndc/integrations/isc_bind_rndc.md @@ -0,0 +1,214 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/bind_rndc/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/bind_rndc/metadata.yaml" +sidebar_label: "ISC Bind (RNDC)" +learn_status: "Published" +learn_rel_path: "Data Collection/DNS and DHCP Servers" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# ISC Bind (RNDC) + + +<img src="https://netdata.cloud/img/isc.png" width="150"/> + + +Plugin: python.d.plugin +Module: bind_rndc + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +Monitor ISCBind (RNDC) performance for optimal DNS server operations. Monitor query rates, response times, and error rates to ensure reliable DNS service delivery. + +This collector uses the `rndc` tool to dump (named.stats) statistics then read them to gather Bind Name Server summary performance metrics. + +This collector is supported on all platforms. + +This collector only supports collecting metrics from a single instance of this integration. + + +### Default Behavior + +#### Auto-Detection + +If no configuration is given, the collector will attempt to read named.stats file at `/var/log/bind/named.stats` + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per ISC Bind (RNDC) instance + +These metrics refer to the entire monitored application. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| bind_rndc.name_server_statistics | requests, rejected_queries, success, failure, responses, duplicate, recursion, nxrrset, nxdomain, non_auth_answer, auth_answer, dropped_queries | stats | +| bind_rndc.incoming_queries | a dimension per incoming query type | queries | +| bind_rndc.outgoing_queries | a dimension per outgoing query type | queries | +| bind_rndc.stats_size | stats_size | MiB | + + + +## Alerts + + +The following alerts are available: + +| Alert name | On metric | Description | +|:------------|:----------|:------------| +| [ bind_rndc_stats_file_size ](https://github.com/netdata/netdata/blob/master/health/health.d/bind_rndc.conf) | bind_rndc.stats_size | BIND statistics-file size | + + +## Setup + +### Prerequisites + +#### Minimum bind version and permissions + +Version of bind must be >=9.6 and the Netdata user must have permissions to run `rndc stats` + +#### Setup log rotate for bind stats + +BIND appends logs at EVERY RUN. It is NOT RECOMMENDED to set `update_every` below 30 sec. +It is STRONGLY RECOMMENDED to create a `bind-rndc.conf` file for logrotate. + +To set up BIND to dump stats do the following: + +1. Add to 'named.conf.options' options {}: +`statistics-file "/var/log/bind/named.stats";` + +2. Create bind/ directory in /var/log: +`cd /var/log/ && mkdir bind` + +3. Change owner of directory to 'bind' user: +`chown bind bind/` + +4. RELOAD (NOT restart) BIND: +`systemctl reload bind9.service` + +5. Run as a root 'rndc stats' to dump (BIND will create named.stats in new directory) + +To allow Netdata to run 'rndc stats' change '/etc/bind/rndc.key' group to netdata: +`chown :netdata rndc.key` + +Last, BUT NOT least, is to create bind-rndc.conf in logrotate.d/: +``` +/var/log/bind/named.stats { + + daily + rotate 4 + compress + delaycompress + create 0644 bind bind + missingok + postrotate + rndc reload > /dev/null + endscript +} +``` +To test your logrotate conf file run as root: +`logrotate /etc/logrotate.d/bind-rndc -d (debug dry-run mode)` + + + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/bind_rndc.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/bind_rndc.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| update_every | Sets the default data collection frequency. | 5 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | +| name | Job name. This value will overwrite the `job_name` value. JOBS with the same name are mutually exclusive. Only one of them will be allowed running at any time. This allows autodetection to try several alternatives and pick the one that works. | | False | +| named_stats_path | Path to the named stats, after being dumped by `nrdc` | /var/log/bind/named.stats | False | + +</details> + +#### Examples + +##### Local bind stats + +Define a local path to bind stats file + +```yaml +local: + named_stats_path: '/var/log/bind/named.stats' + +``` + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `bind_rndc` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin bind_rndc debug trace + ``` + + diff --git a/collectors/python.d.plugin/bind_rndc/metadata.yaml b/collectors/python.d.plugin/bind_rndc/metadata.yaml index 1e9fb24fe..e3568e448 100644 --- a/collectors/python.d.plugin/bind_rndc/metadata.yaml +++ b/collectors/python.d.plugin/bind_rndc/metadata.yaml @@ -4,7 +4,7 @@ modules: plugin_name: python.d.plugin module_name: bind_rndc monitored_instance: - name: ISCBind (RNDC) + name: ISC Bind (RNDC) link: "https://www.isc.org/bind/" categories: - data-collection.dns-and-dhcp-servers diff --git a/collectors/python.d.plugin/boinc/README.md b/collectors/python.d.plugin/boinc/README.md index ea4397754..22c10ca17 100644..120000 --- a/collectors/python.d.plugin/boinc/README.md +++ b/collectors/python.d.plugin/boinc/README.md @@ -1,64 +1 @@ -<!-- -title: "BOINC monitoring with Netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/boinc/README.md" -sidebar_label: "BOINC" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Distributed computing" ---> - -# BOINC collector - -Monitors task counts for the Berkeley Open Infrastructure Networking Computing (BOINC) distributed computing client using the same RPC interface that the BOINC monitoring GUI does. - -It provides charts tracking the total number of tasks and active tasks, as well as ones tracking each of the possible states for tasks. - -## Configuration - -Edit the `python.d/boinc.conf` configuration file using `edit-config` from the Netdata [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/boinc.conf -``` - -BOINC requires use of a password to access it's RPC interface. You can -find this password in the `gui_rpc_auth.cfg` file in your BOINC directory. - -By default, the module will try to auto-detect the password by looking -in `/var/lib/boinc` for this file (this is the location most Linux -distributions use for a system-wide BOINC installation), so things may -just work without needing configuration for the local system. - -You can monitor remote systems as well: - -```yaml -remote: - hostname: some-host - password: some-password -``` - - - - -### Troubleshooting - -To troubleshoot issues with the `boinc` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `boinc` module in debug mode: - -```bash -./python.d.plugin boinc debug trace -``` - +integrations/boinc.md

\ No newline at end of file diff --git a/collectors/python.d.plugin/boinc/integrations/boinc.md b/collectors/python.d.plugin/boinc/integrations/boinc.md new file mode 100644 index 000000000..961f79537 --- /dev/null +++ b/collectors/python.d.plugin/boinc/integrations/boinc.md @@ -0,0 +1,203 @@ +<!--startmeta +custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/boinc/README.md" +meta_yaml: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/boinc/metadata.yaml" +sidebar_label: "BOINC" +learn_status: "Published" +learn_rel_path: "Data Collection/Distributed Computing Systems" +message: "DO NOT EDIT THIS FILE DIRECTLY, IT IS GENERATED BY THE COLLECTOR'S metadata.yaml FILE" +endmeta--> + +# BOINC + + +<img src="https://netdata.cloud/img/bolt.svg" width="150"/> + + +Plugin: python.d.plugin +Module: boinc + +<img src="https://img.shields.io/badge/maintained%20by-Netdata-%2300ab44" /> + +## Overview + +This collector monitors task counts for the Berkeley Open Infrastructure Networking Computing (BOINC) distributed computing client. + +It uses the same RPC interface that the BOINC monitoring GUI does. + +This collector is supported on all platforms. + +This collector supports collecting metrics from multiple instances of this integration, including remote instances. + + +### Default Behavior + +#### Auto-Detection + +By default, the module will try to auto-detect the password to the RPC interface by looking in `/var/lib/boinc` for this file (this is the location most Linux distributions use for a system-wide BOINC installation), so things may just work without needing configuration for a local system. + +#### Limits + +The default configuration for this integration does not impose any limits on data collection. + +#### Performance Impact + +The default configuration for this integration is not expected to impose a significant performance impact on the system. + + +## Metrics + +Metrics grouped by *scope*. + +The scope defines the instance that the metric belongs to. An instance is uniquely identified by a set of labels. + + + +### Per BOINC instance + +These metrics refer to the entire monitored application. + +This scope has no labels. + +Metrics: + +| Metric | Dimensions | Unit | +|:------|:----------|:----| +| boinc.tasks | Total, Active | tasks | +| boinc.states | New, Downloading, Ready to Run, Compute Errors, Uploading, Uploaded, Aborted, Failed Uploads | tasks | +| boinc.sched | Uninitialized, Preempted, Scheduled | tasks | +| boinc.process | Uninitialized, Executing, Suspended, Aborted, Quit, Copy Pending | tasks | + + + +## Alerts + + +The following alerts are available: + +| Alert name | On metric | Description | +|:------------|:----------|:------------| +| [ boinc_total_tasks ](https://github.com/netdata/netdata/blob/master/health/health.d/boinc.conf) | boinc.tasks | average number of total tasks over the last 10 minutes | +| [ boinc_active_tasks ](https://github.com/netdata/netdata/blob/master/health/health.d/boinc.conf) | boinc.tasks | average number of active tasks over the last 10 minutes | +| [ boinc_compute_errors ](https://github.com/netdata/netdata/blob/master/health/health.d/boinc.conf) | boinc.states | average number of compute errors over the last 10 minutes | +| [ boinc_upload_errors ](https://github.com/netdata/netdata/blob/master/health/health.d/boinc.conf) | boinc.states | average number of failed uploads over the last 10 minutes | + + +## Setup + +### Prerequisites + +#### Boinc RPC interface + +BOINC requires use of a password to access it's RPC interface. You can find this password in the `gui_rpc_auth.cfg` file in your BOINC directory. + + +### Configuration + +#### File + +The configuration file name for this integration is `python.d/boinc.conf`. + + +You can edit the configuration file using the `edit-config` script from the +Netdata [config directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md#the-netdata-config-directory). + +```bash +cd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata +sudo ./edit-config python.d/boinc.conf +``` +#### Options + +There are 2 sections: + +* Global variables +* One or more JOBS that can define multiple different instances to monitor. + +The following options can be defined globally: priority, penalty, autodetection_retry, update_every, but can also be defined per JOB to override the global values. + +Additionally, the following collapsed table contains all the options that can be configured inside a JOB definition. + +Every configuration JOB starts with a `job_name` value which will appear in the dashboard, unless a `name` parameter is specified. + + +<details><summary>Config options</summary> + +| Name | Description | Default | Required | +|:----|:-----------|:-------|:--------:| +| update_every | Sets the default data collection frequency. | 5 | False | +| priority | Controls the order of charts at the netdata dashboard. | 60000 | False | +| autodetection_retry | Sets the job re-check interval in seconds. | 0 | False | +| penalty | Indicates whether to apply penalty to update_every in case of failures. | yes | False | +| name | Job name. This value will overwrite the `job_name` value. JOBS with the same name are mutually exclusive. Only one of them will be allowed running at any time. This allows autodetection to try several alternatives and pick the one that works. | | False | +| hostname | Define a hostname where boinc is running. | localhost | False | +| port | The port of boinc RPC interface. | | False | +| password | Provide a password to connect to a boinc RPC interface. | | False | + +</details> + +#### Examples + +##### Configuration of a remote boinc instance + +A basic JOB configuration for a remote boinc instance + +```yaml +remote: + hostname: '1.2.3.4' + port: 1234 + password: 'some-password' + +``` +##### Multi-instance + +> **Note**: When you define multiple jobs, their names must be unique. + +Collecting metrics from local and remote instances. + + +<details><summary>Config</summary> + +```yaml +localhost: + name: 'local' + host: '127.0.0.1' + port: 1234 + password: 'some-password' + +remote_job: + name: 'remote' + host: '192.0.2.1' + port: 1234 + password: some-other-password + +``` +</details> + + + +## Troubleshooting + +### Debug Mode + +To troubleshoot issues with the `boinc` collector, run the `python.d.plugin` with the debug option enabled. The output +should give you clues as to why the collector isn't working. + +- Navigate to the `plugins.d` directory, usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case on + your system, open `netdata.conf` and look for the `plugins` setting under `[directories]`. + + ```bash + cd /usr/libexec/netdata/plugins.d/ + ``` + +- Switch to the `netdata` user. + + ```bash + sudo -u netdata -s + ``` + +- Run the `python.d.plugin` to debug the collector: + + ```bash + ./python.d.plugin boinc debug trace + ``` + + diff --git a/collectors/python.d.plugin/ceph/README.md b/collectors/python.d.plugin/ceph/README.md index 555491ad7..654248b70 100644..120000 --- a/collectors/python.d.plugin/ceph/README.md +++ b/collectors/python.d.plugin/ceph/README.md @@ -1,71 +1 @@ -<!-- -title: "CEPH monitoring with Netdata" -custom_edit_url: "https://github.com/netdata/netdata/edit/master/collectors/python.d.plugin/ceph/README.md" -sidebar_label: "CEPH" -learn_status: "Published" -learn_topic_type: "References" -learn_rel_path: "Integrations/Monitor/Storage" ---> - -# CEPH collector - -Monitors the ceph cluster usage and consumption data of a server, and produces: - -- Cluster statistics (usage, available, latency, objects, read/write rate) -- OSD usage -- OSD latency -- Pool usage -- Pool read/write operations -- Pool read/write rate -- number of objects per pool - -## Requirements - -- `rados` python module -- Granting read permissions to ceph group from keyring file - -```shell -# chmod 640 /etc/ceph/ceph.client.admin.keyring -``` - -## Configuration - -Edit the `python.d/ceph.conf` configuration file using `edit-config` from the Netdata [config -directory](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md), which is typically at `/etc/netdata`. - -```bash -cd /etc/netdata # Replace this path with your Netdata config directory, if different -sudo ./edit-config python.d/ceph.conf -``` - -Sample: - -```yaml -local: - config_file: '/etc/ceph/ceph.conf' - keyring_file: '/etc/ceph/ceph.client.admin.keyring' -``` - - - - -### Troubleshooting - -To troubleshoot issues with the `ceph` module, run the `python.d.plugin` with the debug option enabled. The -output will give you the output of the data collection job or error messages on why the collector isn't working. - -First, navigate to your plugins directory, usually they are located under `/usr/libexec/netdata/plugins.d/`. If that's -not the case on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the -plugin's directory, switch to the `netdata` user. - -```bash -cd /usr/libexec/netdata/plugins.d/ -sudo su -s /bin/bash netdata -``` - -Now you can manually run the `ceph` module in debug mode: - -```bash -./python.d.plugin ceph debug trace -``` - +integrations/ceph.md