Diffstat

| -rw-r--r-- | docs/guides/monitor-cockroachdb.md | 136 | ||||

| -rw-r--r-- | docs/guides/monitor-hadoop-cluster.md (renamed from docs/tutorials/monitor-hadoop-cluster.md) | 19 | ||||

| -rw-r--r-- | docs/guides/monitor/anomaly-detection.md | 191 | ||||

| -rw-r--r-- | docs/guides/monitor/dimension-templates.md (renamed from docs/tutorials/dimension-templates.md) | 25 | ||||

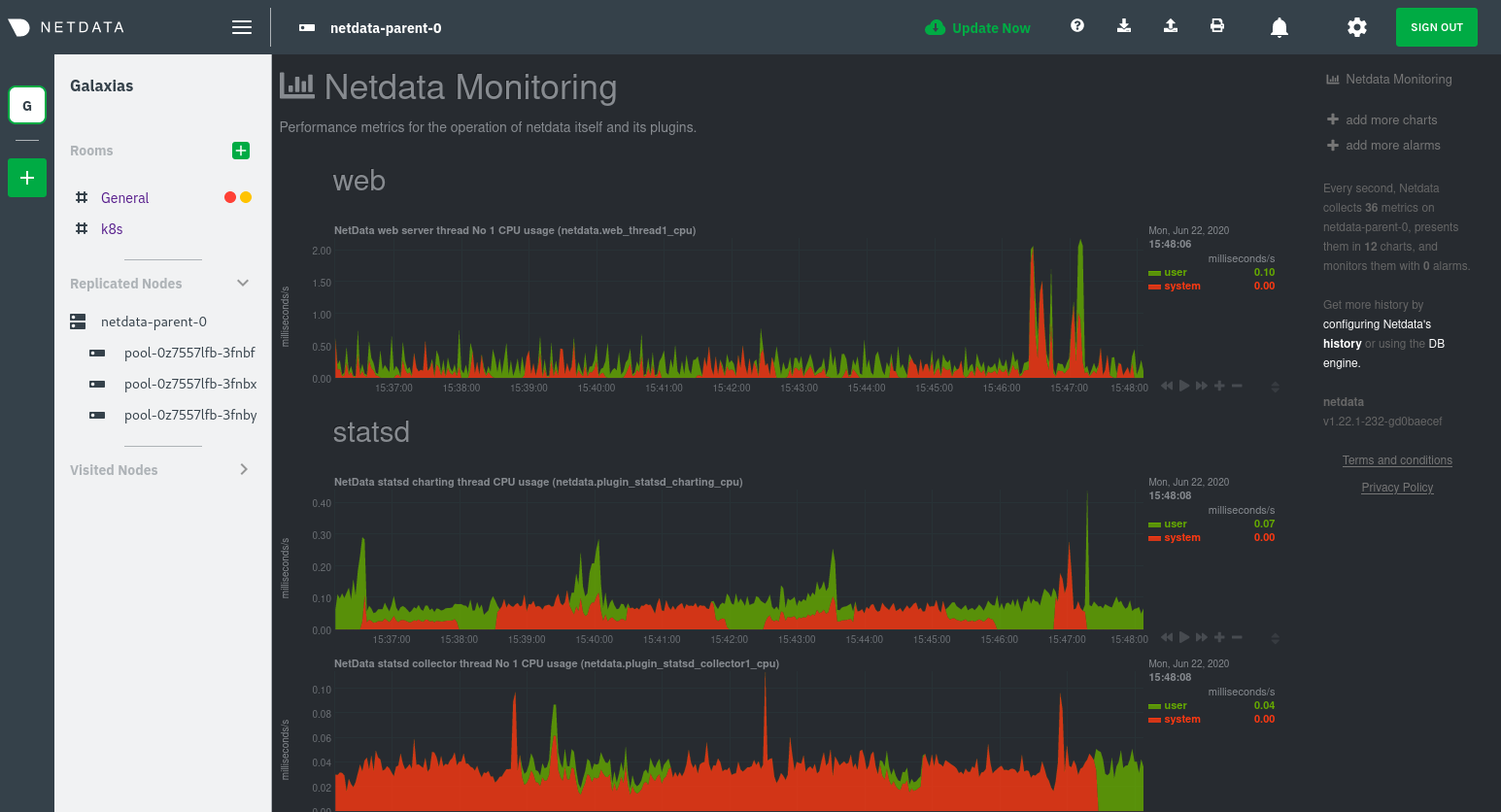

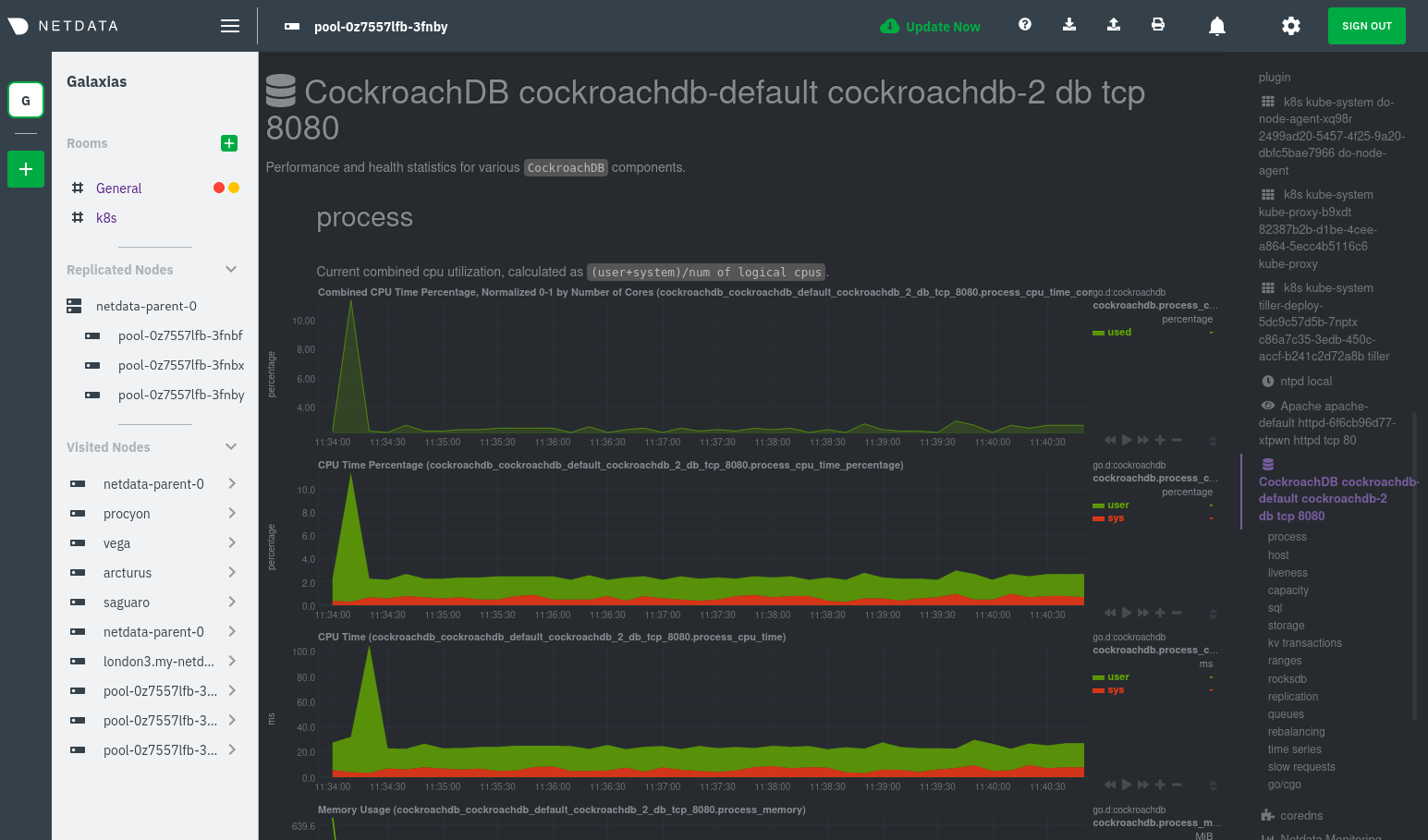

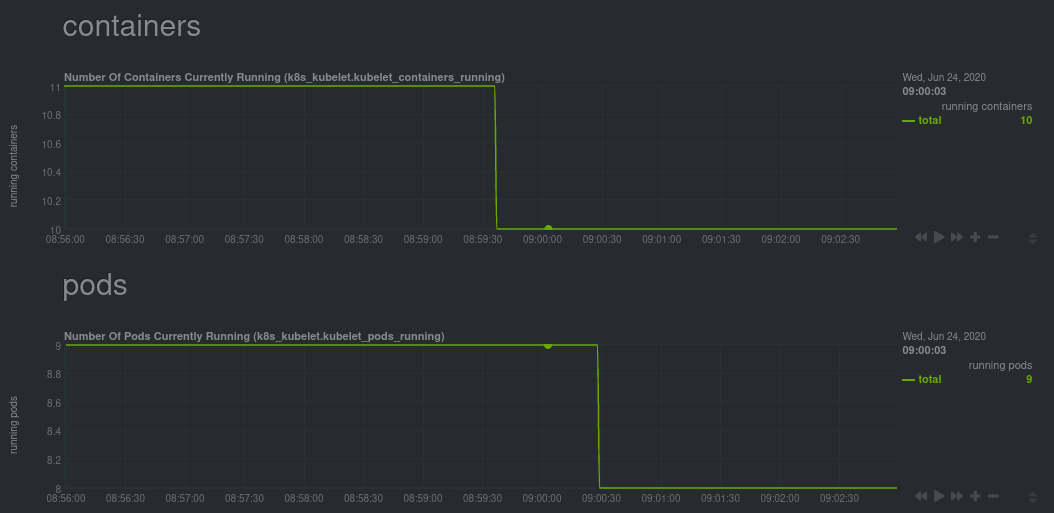

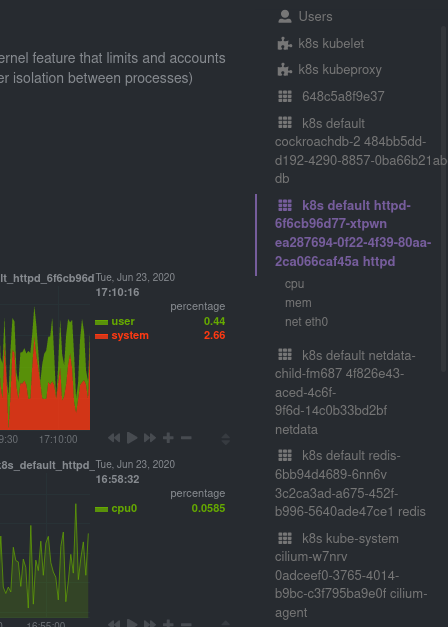

| -rw-r--r-- | docs/guides/monitor/kubernetes-k8s-netdata.md | 278 | ||||

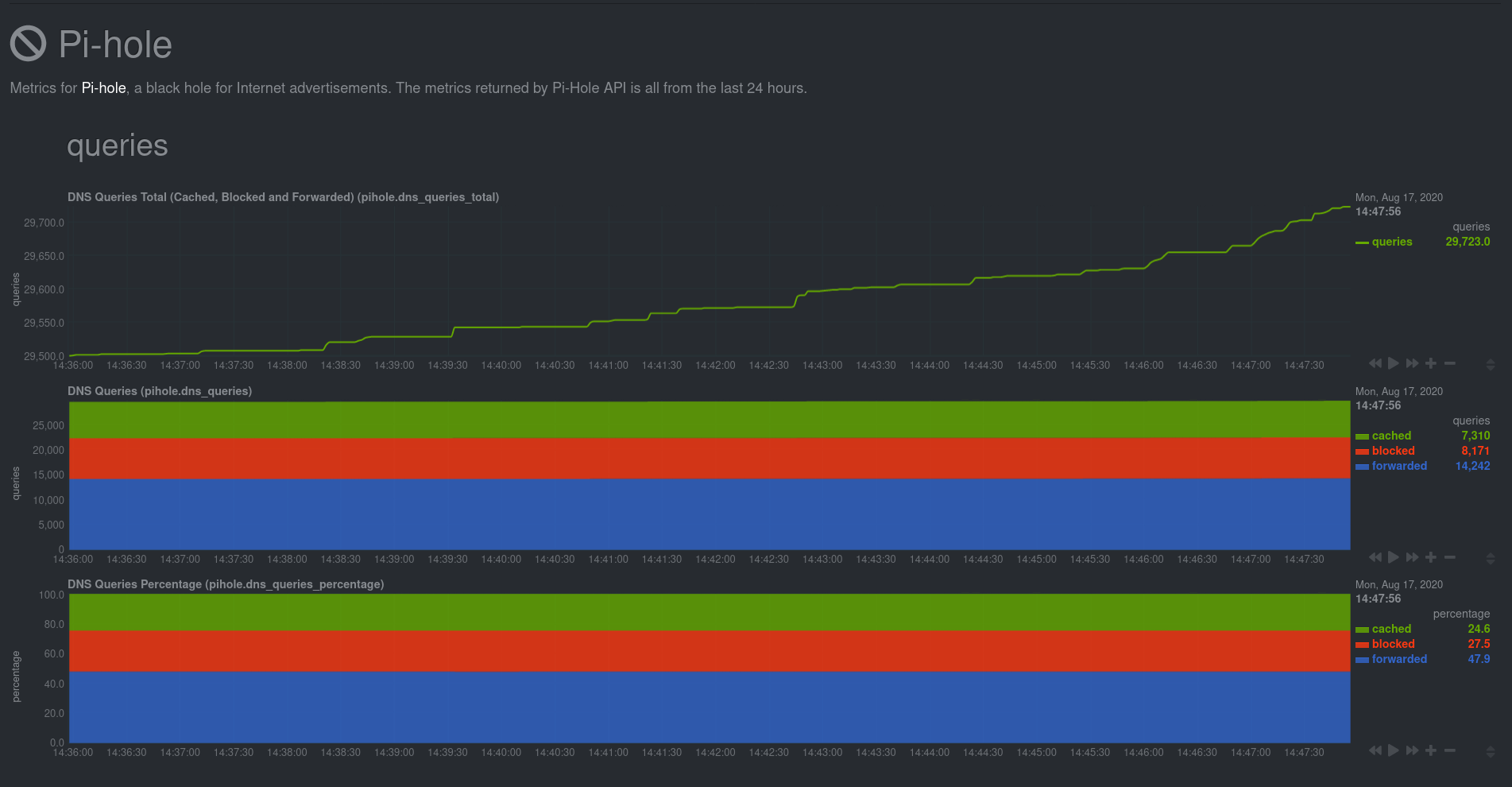

| -rw-r--r-- | docs/guides/monitor/pi-hole-raspberry-pi.md | 163 | ||||

| -rw-r--r-- | docs/guides/monitor/process.md | 299 | ||||

| -rw-r--r-- | docs/guides/monitor/stop-notifications-alarms.md | 92 | ||||

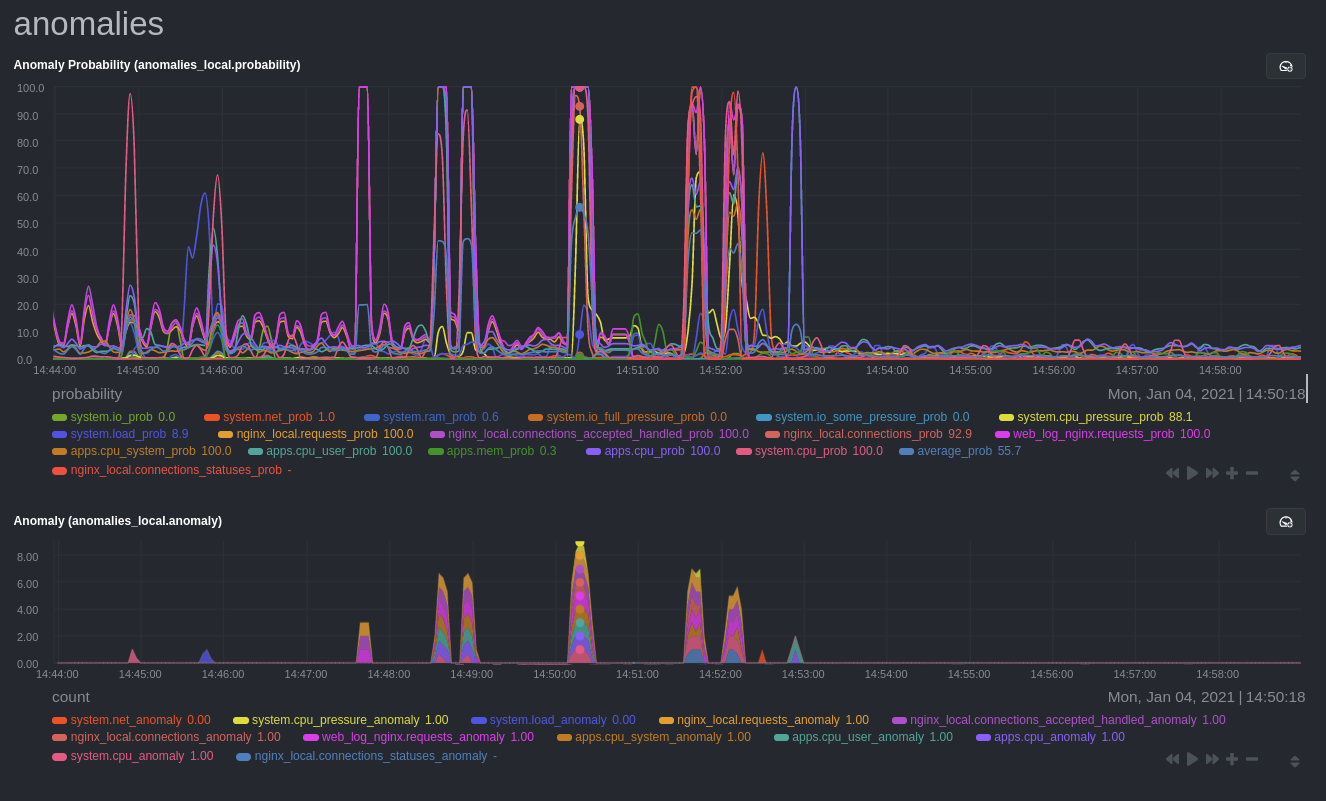

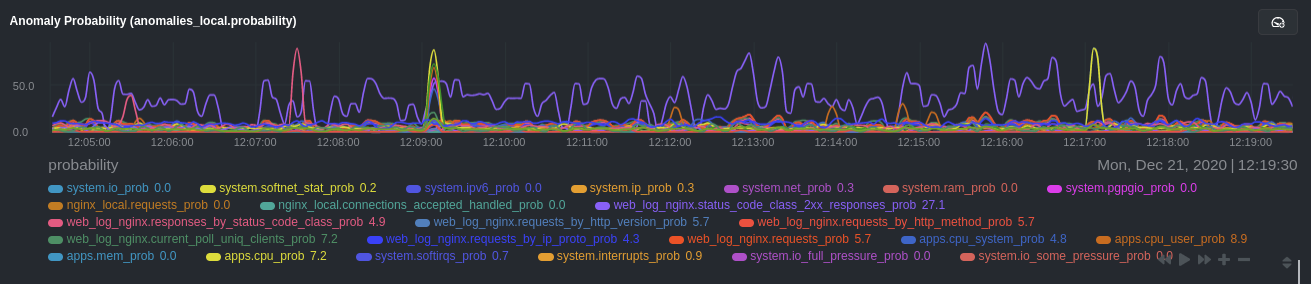

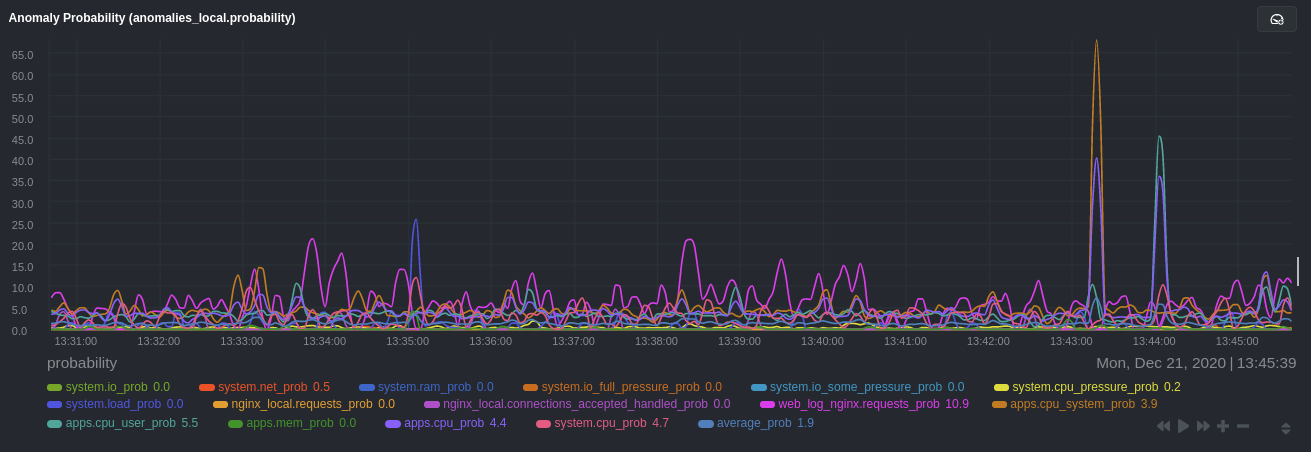

| -rw-r--r-- | docs/guides/monitor/visualize-monitor-anomalies.md | 147 |

9 files changed, 1333 insertions, 17 deletions