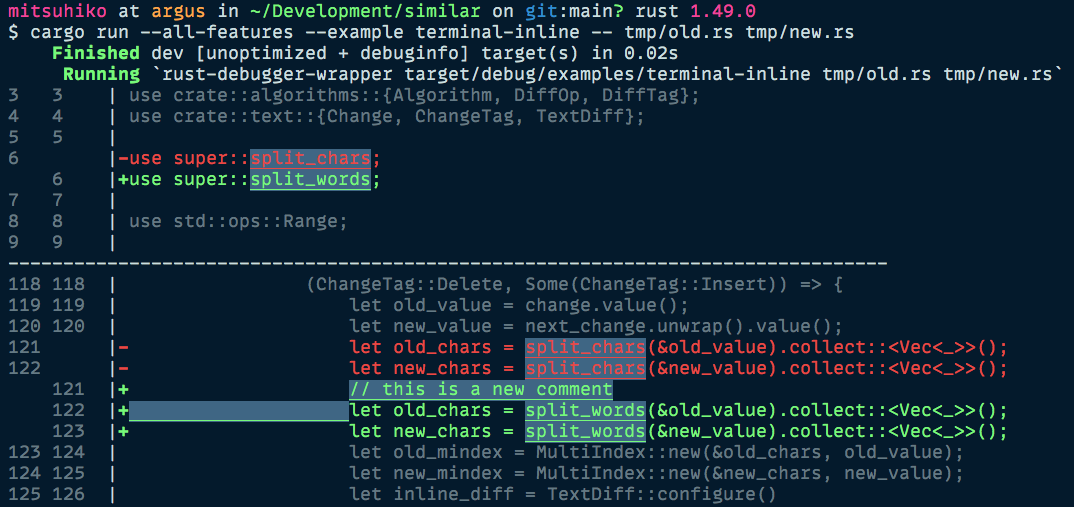

Diffstat (limited to 'vendor/similar')

65 files changed, 8063 insertions, 0 deletions

diff --git a/vendor/similar/.cargo-checksum.json b/vendor/similar/.cargo-checksum.json
new file mode 100644
index 0000000..c9c194d
--- /dev/null
+++ b/vendor/similar/.cargo-checksum.json
@@ -0,0 +1 @@
+{"files":{},"package":"2aeaf503862c419d66959f5d7ca015337d864e9c49485d771b732e2a20453597"}