
| Field | Value | Date |
|---|---|---|
| author | Daniel Baumann <daniel.baumann@progress-linux.org> | 2023-10-17 09:30:23 +0000 |
| committer | Daniel Baumann <daniel.baumann@progress-linux.org> | 2023-10-17 09:30:23 +0000 |
| commit | 517a443636daa1e8085cb4e5325524a54e8a8fd7 | |
| tree | 5352109cc7cd5122274ab0cfc1f887b685f04edf /collectors/systemd-journal.plugin | |
| parent | Releasing debian version 1.42.4-1. | |
| download | netdata-517a443636daa1e8085cb4e5325524a54e8a8fd7.tar.xz, netdata-517a443636daa1e8085cb4e5325524a54e8a8fd7.zip | |

Merging upstream version 1.43.0.

Signed-off-by: Daniel Baumann <daniel.baumann@progress-linux.org>

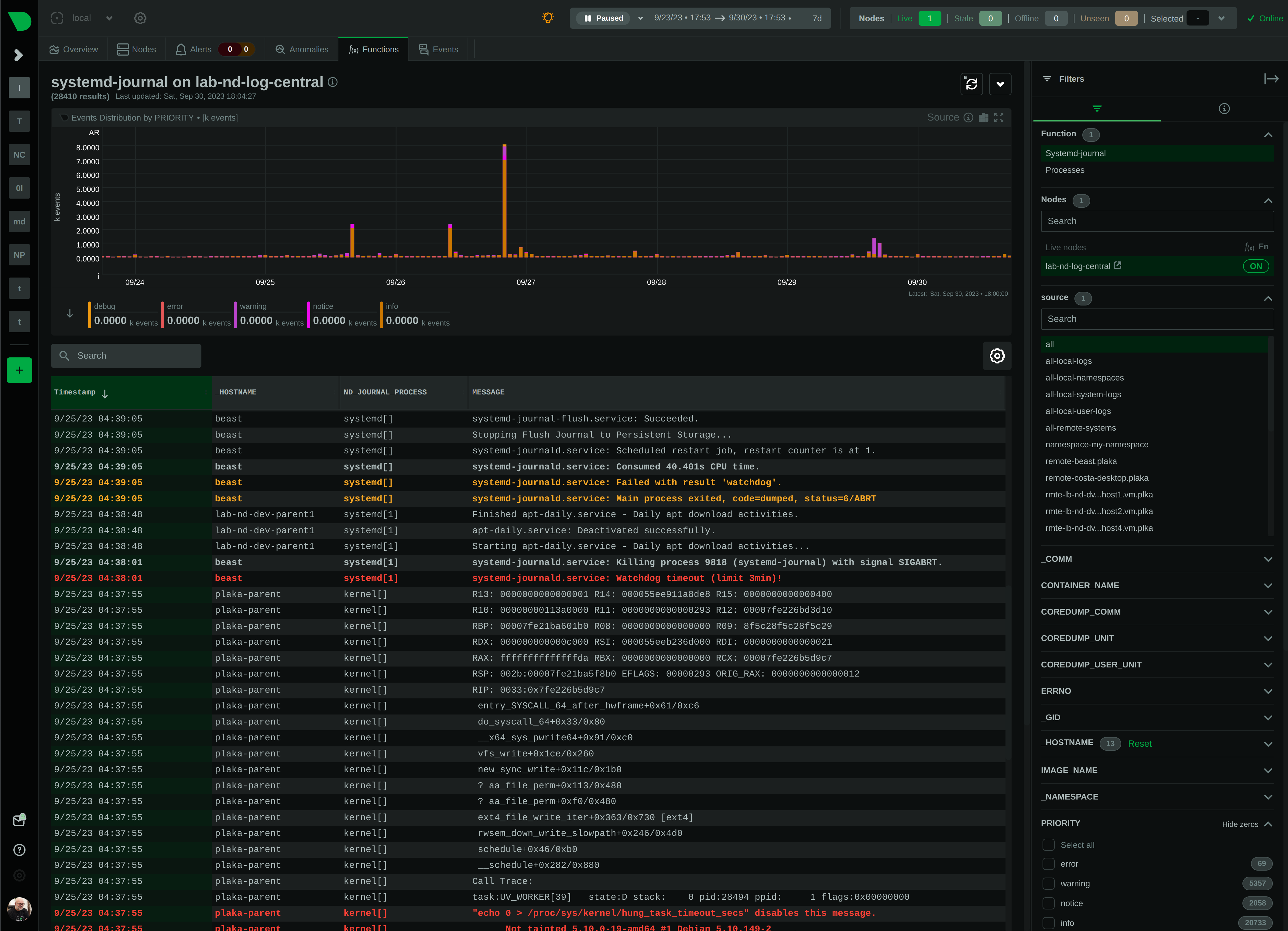

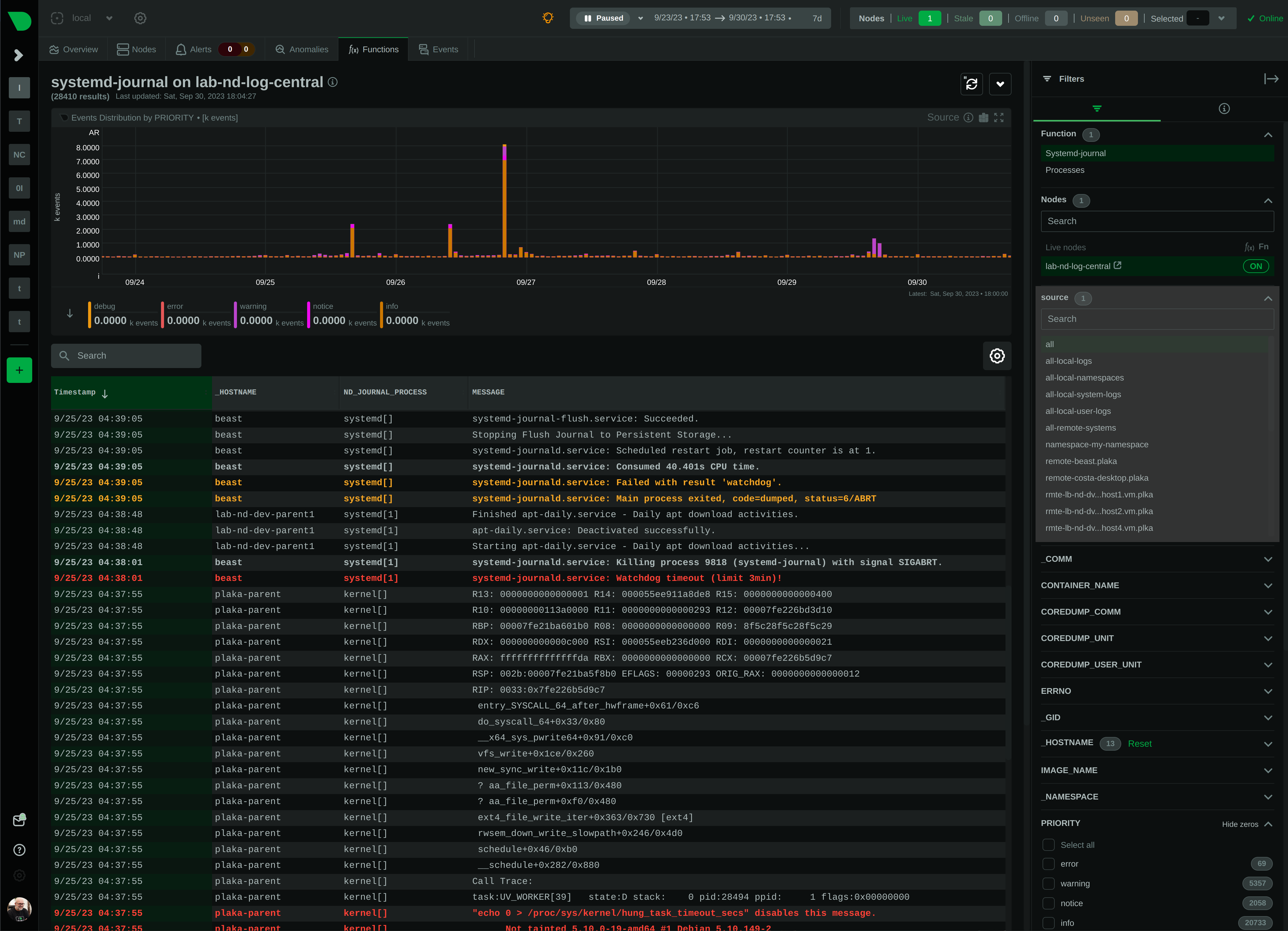

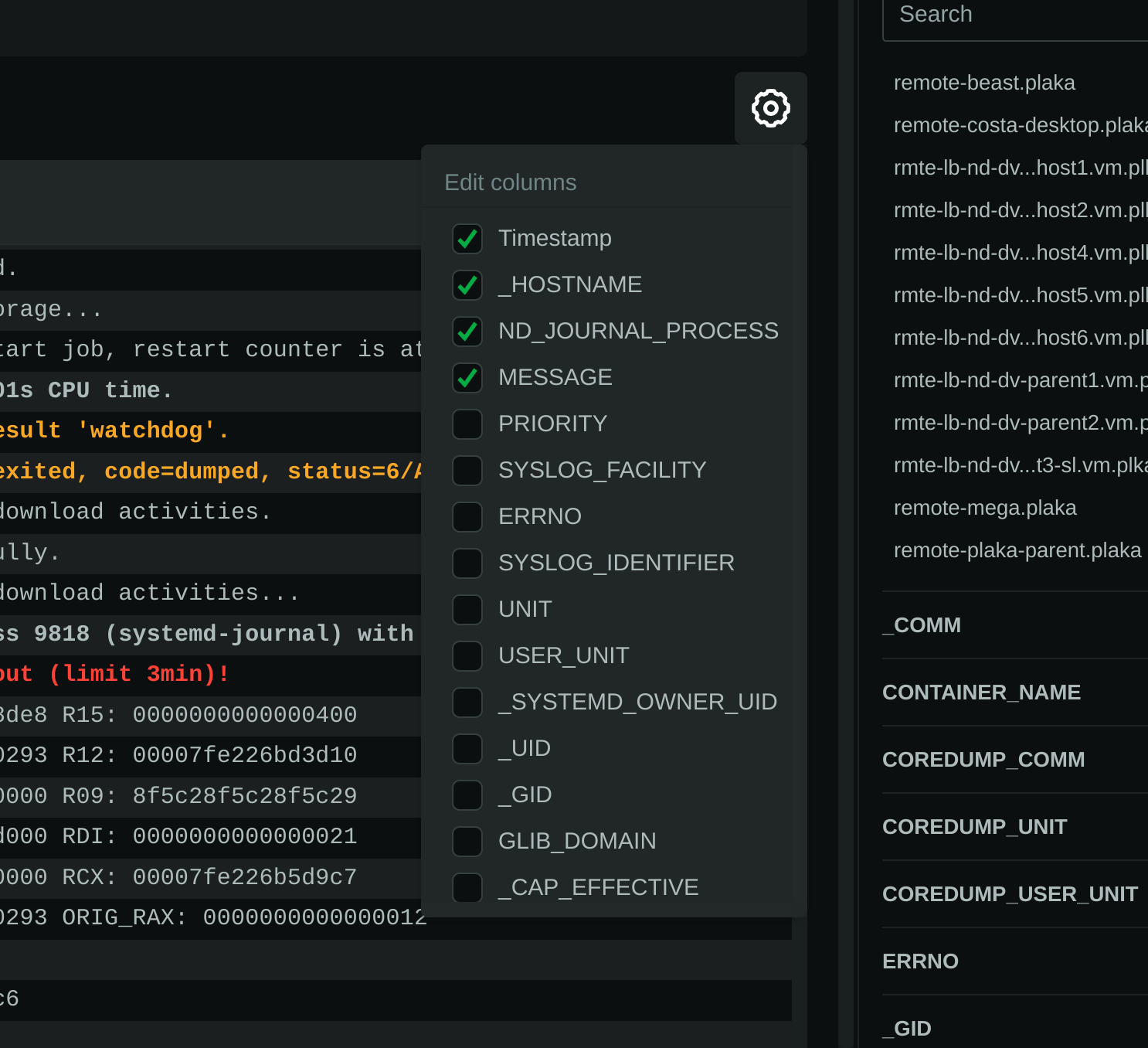

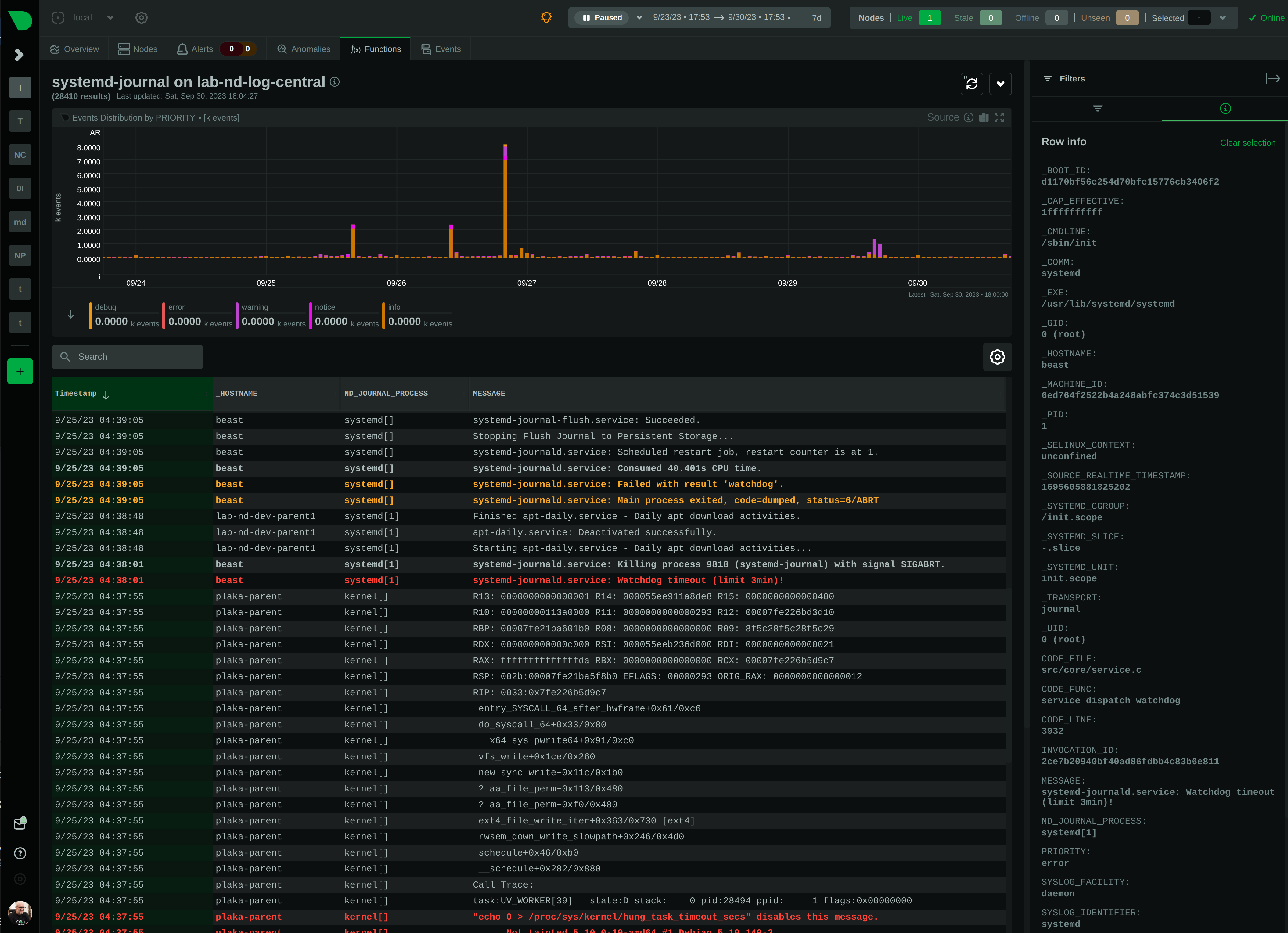

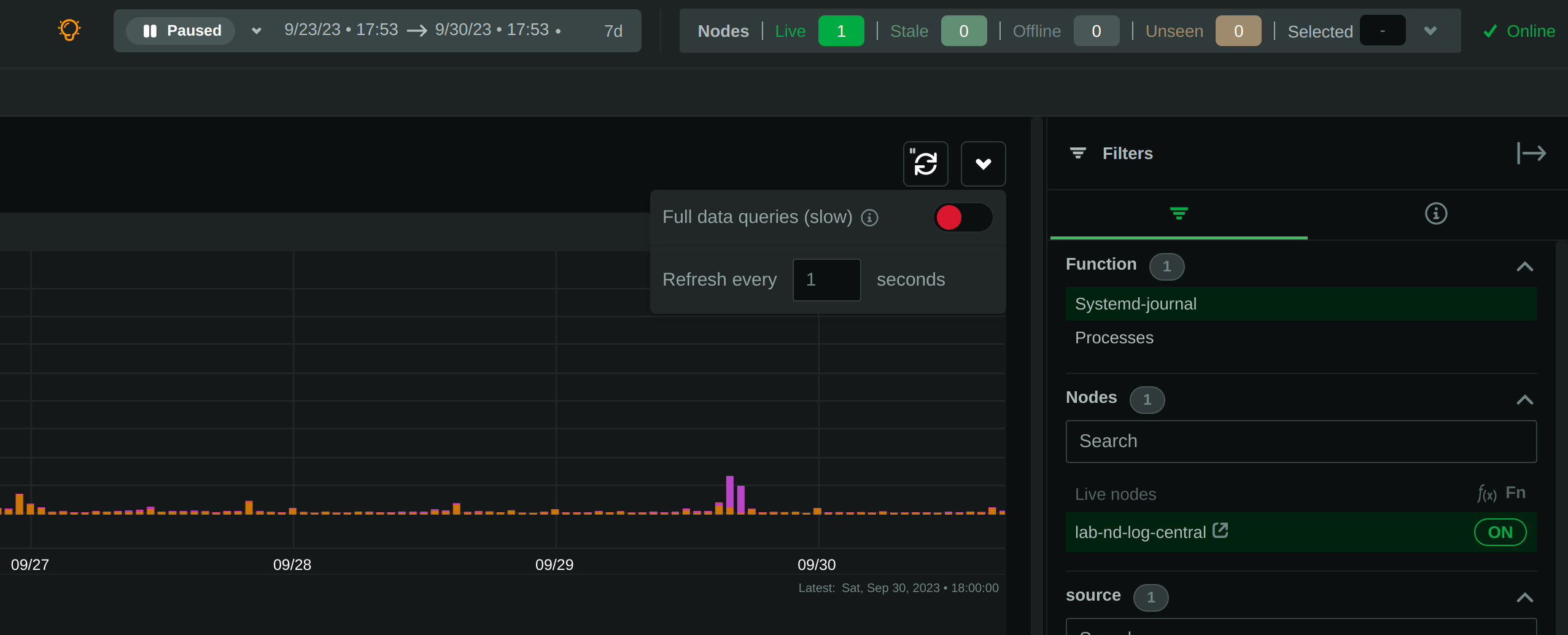

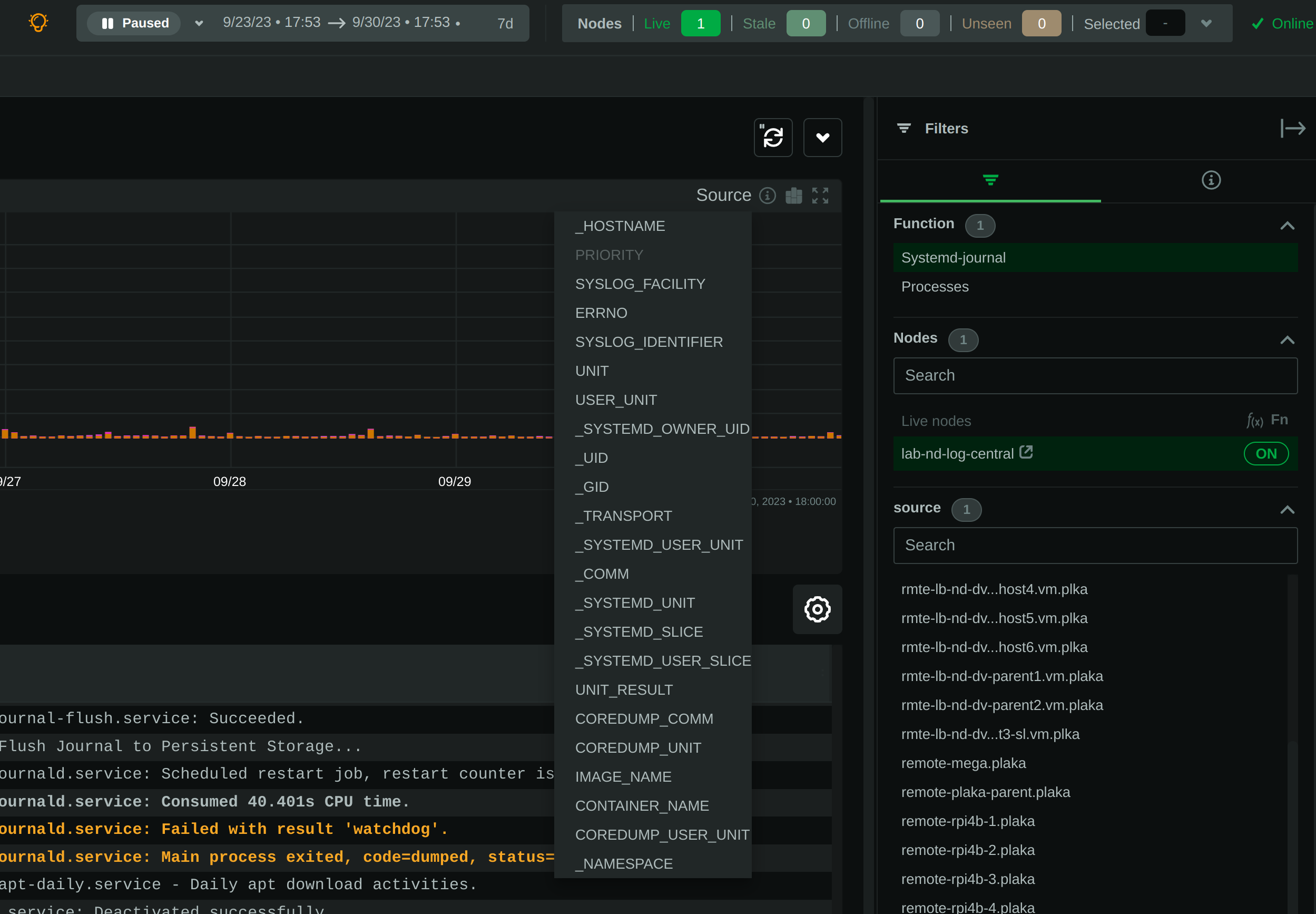

Diffstat (limited to 'collectors/systemd-journal.plugin')

| Mode | File | Lines changed |
|---|---|---|
| -rw-r--r-- | collectors/systemd-journal.plugin/README.md | 673 |
| -rw-r--r-- | collectors/systemd-journal.plugin/systemd-journal.c | 2664 |

2 files changed, 3100 insertions, 237 deletions