| author | Daniel Baumann <daniel.baumann@progress-linux.org> | 2021-02-07 11:49:00 +0000 |
|---|---|---|
| committer | Daniel Baumann <daniel.baumann@progress-linux.org> | 2021-02-07 12:42:05 +0000 |
| commit | 2e85f9325a797977eea9dfea0a925775ddd211d9 (patch) | |
| tree | 452c7f30d62fca5755f659b99e4e53c7b03afc21 /docs | |
| parent | Releasing debian version 1.19.0-4. (diff) | |
| download | netdata-2e85f9325a797977eea9dfea0a925775ddd211d9.tar.xz netdata-2e85f9325a797977eea9dfea0a925775ddd211d9.zip | |

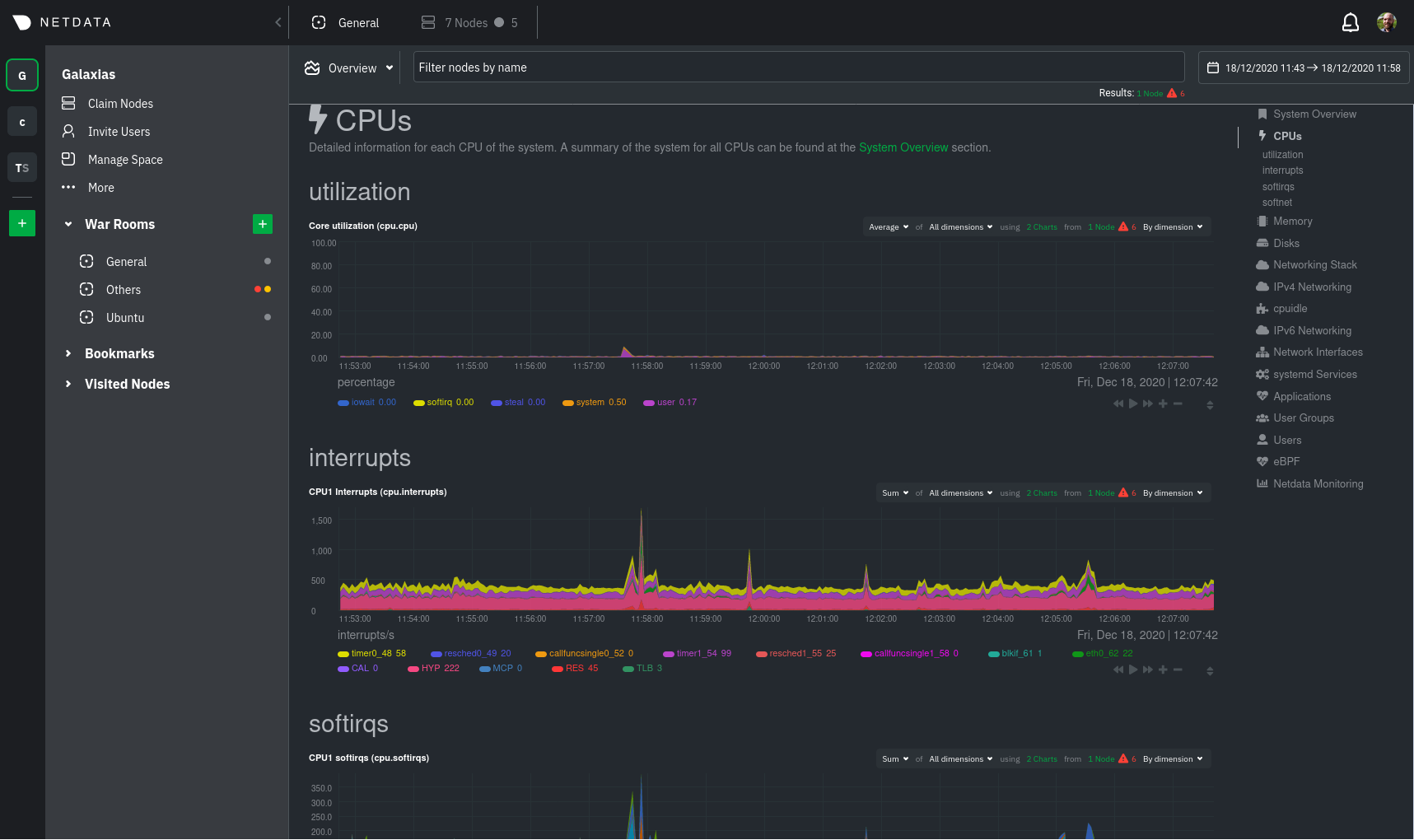

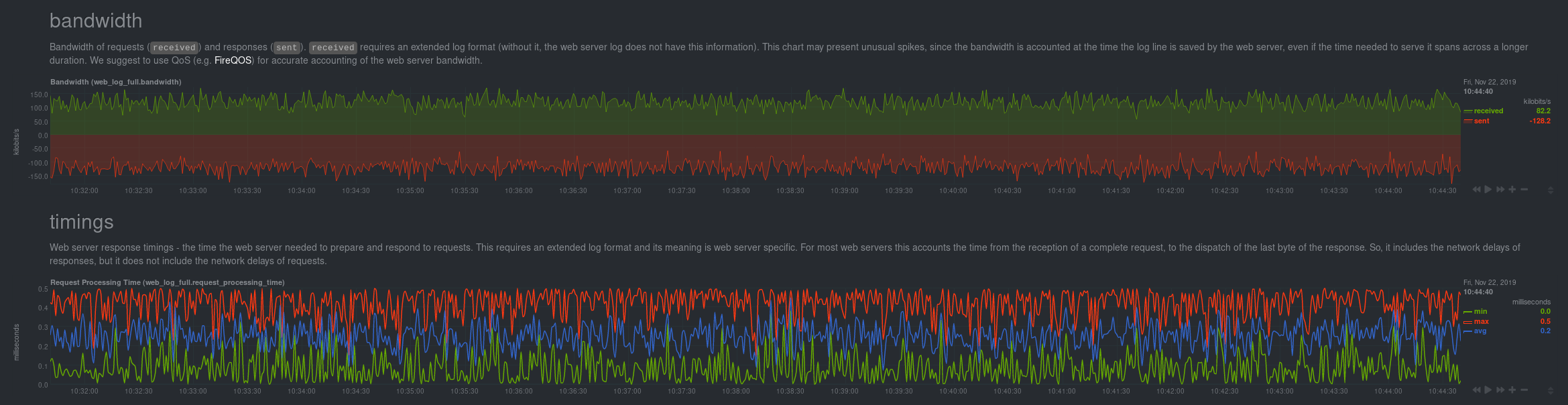

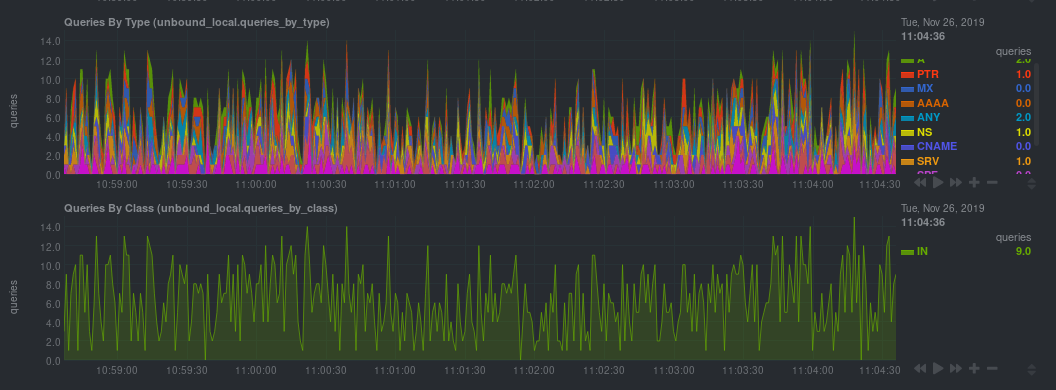

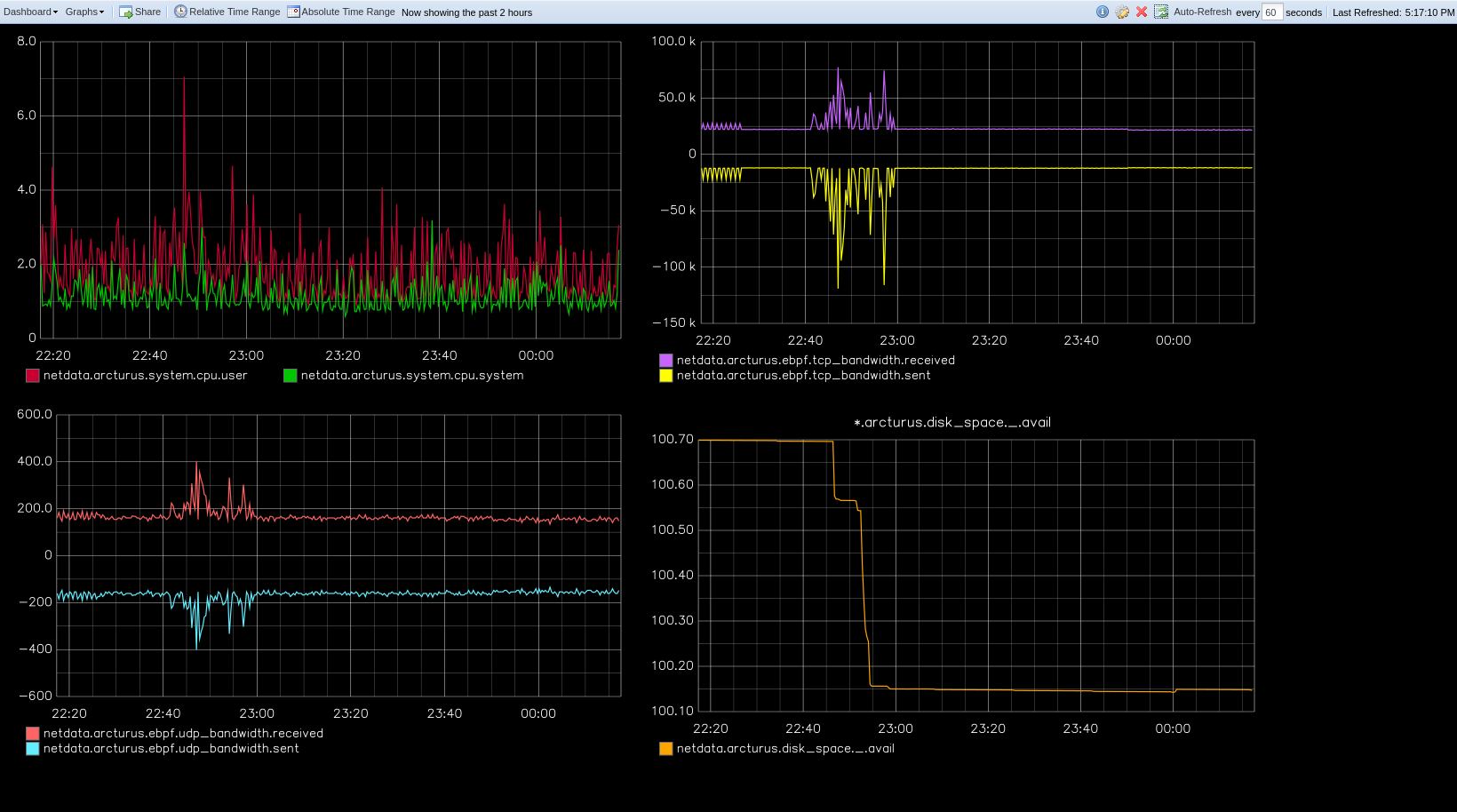

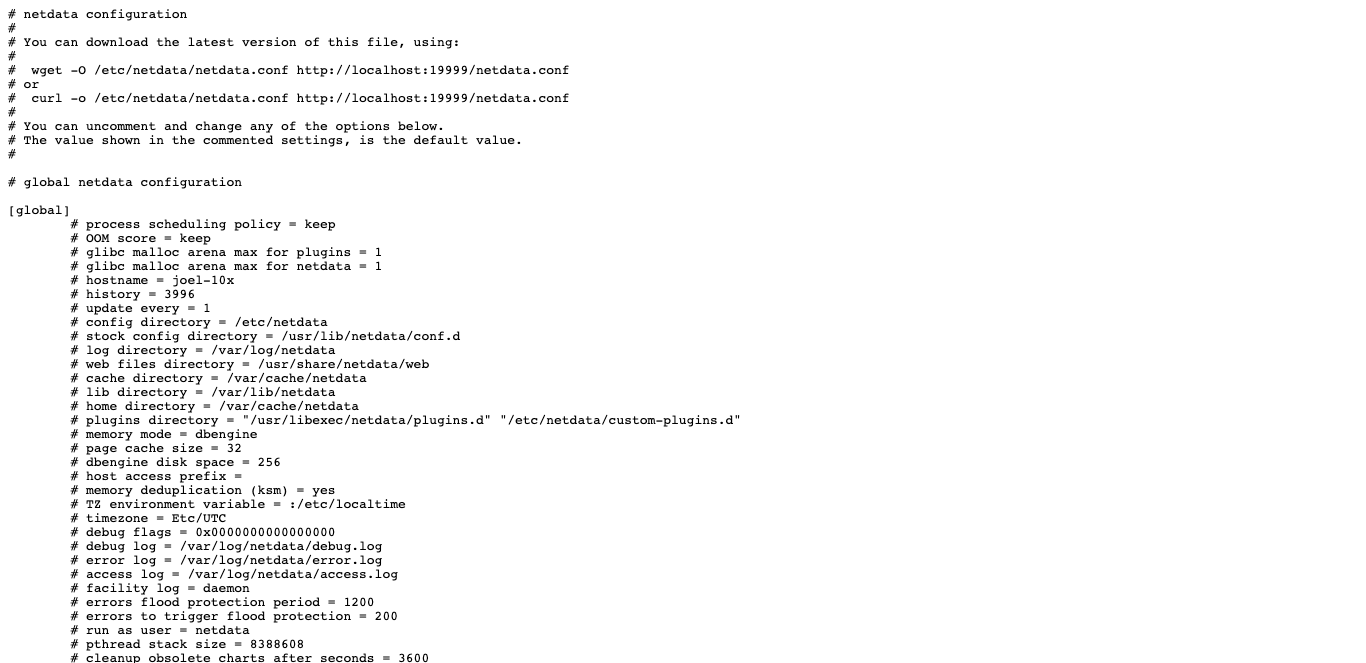

Merging upstream version 1.29.0.

Signed-off-by: Daniel Baumann <daniel.baumann@progress-linux.org>

Diffstat (limited to 'docs')

97 files changed, 8402 insertions, 3367 deletions